AI Firms Mustn’t Govern Themselves, Say Ex-OpenAI Board Members

AI firms mustn’t govern themselves: that is the stark warning from former members of OpenAI’s board, and it has sent ripples through the tech world. Their concerns aren’t just about potential conflicts of interest; they’re about the future of AI and its impact on society. This isn’t a simple debate about regulation versus deregulation; it’s a discussion about who should hold the reins of this powerful technology and how to ensure it’s used responsibly.

The former board members highlight the inherent dangers of allowing AI companies to self-regulate. Their argument centers on the potential for bias, the lack of accountability, and the risks of unchecked power in an industry whose technology could fundamentally reshape our world. They call for independent oversight, drawing parallels with other industries where self-regulation has proven insufficient to address ethical concerns and safeguard the public interest.

The stakes are high, and the call for external oversight is a pivotal moment in the ongoing conversation surrounding AI ethics and governance.

The Concerns of Former OpenAI Board Members

The recent departure of several prominent figures from OpenAI’s board has sparked a crucial conversation about the governance of artificial intelligence. These former board members have voiced significant concerns regarding the potential dangers of allowing AI companies to self-regulate, arguing that such an approach is inherently flawed and poses considerable risks to society. Their concerns highlight a critical need for external oversight and robust regulatory frameworks to ensure the responsible development and deployment of AI.

The specific concerns raised by these former board members center on the potential for conflicts of interest inherent in self-regulation. AI companies, driven by profit motives, may prioritize financial gain over ethical considerations, potentially leading to the development and deployment of AI systems that are biased, discriminatory, or even harmful. The lack of independent oversight could allow these companies to evade accountability for the negative consequences of their actions, leaving society vulnerable to the potential harms of unchecked AI advancement.

The OpenAI board drama highlights a crucial point: self-regulation in powerful industries rarely works, and external oversight is needed. Leaving AI firms to police themselves is a recipe for disaster.

Potential Risks of Self-Regulation in the AI Industry

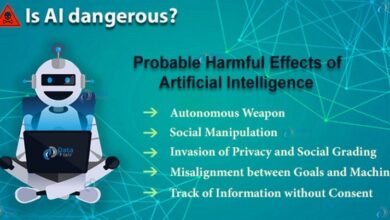

Self-regulation in the AI industry carries significant ethical risks. The potential for biased algorithms to perpetuate and amplify existing societal inequalities is a major concern. Without external scrutiny, AI companies might fail to adequately address issues of fairness, transparency, and accountability in their algorithms. This could lead to discriminatory outcomes in areas like loan applications, hiring processes, and even criminal justice, exacerbating existing social disparities.

Furthermore, the lack of transparency in AI development could make it difficult to identify and address biases, hindering efforts to ensure fairness and equity. The potential for misuse of AI technologies, such as in autonomous weapons systems or sophisticated surveillance technologies, also necessitates strong external oversight to prevent the development and deployment of harmful applications.

Examples of Self-Regulation Failures in Other Industries

History provides numerous examples of self-regulation failing to adequately address ethical concerns. The financial industry before the 2008 financial crisis is a prime example. Banks and other financial institutions were left largely to police their own risk-taking, which contributed to lax oversight, risky practices, and ultimately a devastating global economic downturn. Similarly, the tobacco industry’s history of downplaying the health risks of smoking, despite internal knowledge of the dangers, demonstrates how self-regulation can fail to prioritize public health and safety.

These instances highlight the limitations of relying solely on industry self-regulation to address ethical and societal concerns.

Comparison of Self-Regulation and External Oversight

The following table compares the trade-offs of self-regulation and external oversight for AI firms:

| Feature | Self-Regulation | External Oversight |
|---|---|---|
| Responsiveness to industry needs | High; can adapt quickly to evolving technologies | Lower; regulations can lag behind technological advancements |
| Cost | Potentially lower initial costs for firms | Higher costs associated with regulatory bodies and enforcement |
| Accountability | Lower; potential for conflicts of interest and lack of transparency | Higher; independent oversight and enforcement mechanisms ensure accountability |
| Ethical considerations | Potentially lower prioritization; profit motives may outweigh ethical concerns | Higher prioritization; regulations can be designed to address ethical concerns proactively |

The Need for External Oversight of AI Development

The recent concerns raised by former OpenAI board members highlight a critical issue: the potential dangers of allowing powerful AI technologies to be governed solely by the companies that develop them. Self-regulation, while potentially beneficial in certain aspects, is insufficient to guarantee the ethical and safe development and deployment of AI. Independent oversight is crucial to mitigate risks and ensure alignment with societal values and the public good.

The development of advanced AI systems presents unprecedented challenges. These systems are rapidly evolving, impacting various sectors and potentially altering the very fabric of society. Without robust external oversight, the potential for misuse, bias, and unintended consequences is significantly amplified. This necessitates a proactive approach, establishing independent bodies capable of regulating this transformative technology.

Potential Roles and Responsibilities of Independent Oversight Bodies

Independent oversight bodies for AI development should possess a broad range of responsibilities, encompassing various stages of the AI lifecycle. These bodies need the authority to review algorithms for bias, assess the potential societal impact of AI systems before deployment, and enforce regulations effectively. They must also be equipped to investigate complaints, impose penalties for violations, and promote transparency and accountability within the AI industry.

Furthermore, these bodies should actively engage in research to understand emerging AI capabilities and anticipate future risks. Their roles extend beyond simple reactive measures; they should be proactive in shaping the ethical and responsible development of AI. Enforcement mechanisms could include fines, licensing restrictions, and even temporary or permanent bans on specific AI technologies deemed excessively risky.

Examples of Existing Regulatory Frameworks for Emerging Technologies

Several existing regulatory frameworks for other emerging technologies offer valuable precedents for AI regulation. For example, the pharmaceutical industry is heavily regulated by bodies like the FDA (Food and Drug Administration) in the United States, which mandates rigorous testing and approval processes before new drugs can reach the market. Similarly, the aviation industry is subject to strict safety regulations overseen by bodies like the FAA (Federal Aviation Administration).

These examples demonstrate the importance of independent oversight in managing high-risk technologies and protecting the public interest. The regulatory approaches adopted in these sectors, particularly concerning risk assessment, testing procedures, and enforcement mechanisms, can inform the development of a robust AI regulatory framework.

A Hypothetical Structure for an Independent AI Regulatory Body

A hypothetical independent AI regulatory body could be structured as a multi-stakeholder commission, comprising experts from diverse fields including computer science, ethics, law, social sciences, and public policy. This composition ensures a balanced perspective and avoids the dominance of any single interest group. The commission would have the authority to set standards for AI development, conduct audits of AI systems, and investigate potential violations.

Its powers should include the ability to issue warnings, impose fines, and even ban the use of certain AI technologies if deemed necessary to protect public safety or prevent societal harm. Crucially, however, its limits should be clearly defined to prevent overreach and avoid stifling innovation. Transparency and public accountability mechanisms would be essential, ensuring the commission’s decisions are subject to public scrutiny and review.

This body could also foster collaboration between researchers, developers, and policymakers, facilitating a continuous improvement process.

Potential Conflicts of Interest in Self-Regulation

The debate surrounding AI regulation is heating up, and for good reason. The power wielded by the leading AI firms is unprecedented, and the potential for misuse is immense. Allowing these companies to self-regulate creates a significant risk of conflicts of interest that could undermine safety and ethical considerations. This self-regulation model essentially puts the fox in charge of the henhouse, creating a situation ripe for exploitation and neglect.

Self-regulation of AI development by the companies themselves presents inherent challenges. The profit motive often overshadows other considerations, leading to decisions that prioritize financial gain over ethical implications or robust safety protocols. This inherent conflict of interest is exacerbated by the lack of transparency often found within these powerful organizations, making it difficult for external parties to assess the true extent of the risks involved. A lack of independent oversight can easily lead to a situation where the companies’ self-reported safety measures are insufficient, inaccurate, or simply nonexistent.

Bias in Self-Regulation versus External Regulation

Self-regulation inherently carries a higher risk of bias than external oversight. AI systems are trained on data, and if that data reflects existing societal biases, the resulting AI will likely perpetuate and even amplify those biases. When a company regulates itself, it has a strong incentive to downplay or ignore the potential for bias in its own products, as acknowledging such biases could hurt its market share and profitability.

External regulators, on the other hand, can bring an objective perspective and apply standards that address bias more comprehensively. For instance, an externally regulated AI recruitment tool might be required to undergo rigorous testing to ensure it doesn’t discriminate against specific demographics, a step a company might skip in self-regulation to avoid potentially slowing down product release.

Examples of Conflicts of Interest Leading to Inadequate Safety Measures

Consider a hypothetical scenario where an AI firm develops a powerful autonomous driving system. In a self-regulated environment, the company might prioritize a faster release date, even if it means compromising on safety features to cut costs or meet a deadline. This could lead to accidents and injuries that would likely be avoided under more rigorous external oversight.

Similarly, a company developing AI for facial recognition might downplay the potential for inaccuracies and biases that disproportionately affect certain racial groups if it is solely responsible for assessing its technology’s impact. The lack of external scrutiny could result in widespread discriminatory practices. In a self-regulated system, the financial incentives to prioritize speed and market dominance over thorough safety testing and ethical considerations are simply too great.

Potential Safeguards to Mitigate Conflicts of Interest

Several safeguards could help mitigate the risks associated with self-regulation. Independent audits conducted by third-party experts could provide an external check on a company’s self-reported safety measures. These audits should be rigorous, transparent, and publicly accessible. Furthermore, legally binding regulations with clear penalties for non-compliance are crucial. These regulations should cover a wide range of ethical and safety concerns, including data privacy, bias mitigation, and transparency in algorithms.

Finally, the establishment of an independent regulatory body with the authority to investigate and penalize companies for violating these regulations is essential to ensure accountability. This body would need the resources and expertise to effectively monitor the industry and enforce regulations. These measures, taken together, offer a more robust framework than relying solely on the good faith of the companies themselves.

The Impact on Innovation and Competition

The debate surrounding AI regulation is inextricably linked to its potential impact on innovation and the competitive landscape. While concerns about safety and ethical implications are paramount, the economic and technological ramifications of different regulatory approaches cannot be ignored. A heavy-handed regulatory framework could stifle progress, while a laissez-faire approach might exacerbate existing inequalities and risks. Finding the right balance is crucial.

External regulation, while potentially slowing the immediate pace of AI development, can ultimately foster a more sustainable and responsible innovation ecosystem. Self-regulation, on the other hand, risks prioritizing the interests of established players, potentially hindering the entry of smaller, more innovative companies.

External Regulation’s Effect on the Pace of AI Innovation

The introduction of external regulations often leads to increased compliance costs and bureaucratic hurdles for AI companies. This can slow down the development cycle for new AI products and services, particularly for smaller firms with limited resources. However, a well-designed regulatory framework can also provide clarity and predictability, reducing uncertainty and encouraging long-term investment in research and development. For instance, clear standards for data privacy and security can reduce the time and resources spent on addressing potential legal challenges, allowing companies to focus on innovation.

Conversely, a lack of clear guidelines can lead to a “wait-and-see” approach, slowing overall progress.

Self-Regulation versus External Regulation on Competition

Self-regulation, primarily driven by industry consortiums or individual companies, often lacks the necessary transparency and accountability to ensure fair competition. Larger, well-established companies might have a disproportionate influence on the development of self-regulatory guidelines, potentially creating barriers to entry for smaller competitors. External regulation, on the other hand, can create a level playing field by establishing minimum standards that all companies must meet, fostering a more equitable competitive environment.

This can encourage the emergence of innovative startups that might otherwise be disadvantaged by the dominance of larger players.

Regulatory Models and Specific AI Applications

Different regulatory models will inevitably have varying impacts on the development of specific AI applications. For example, the development of autonomous vehicles will likely be heavily influenced by safety regulations, requiring rigorous testing and certification processes before deployment. These regulations, while potentially slowing down the initial rollout, are crucial for public safety and acceptance. Conversely, the regulation of facial recognition technology might focus on issues of bias and privacy, requiring algorithmic transparency and accountability mechanisms.

A less stringent regulatory approach in this area could lead to widespread misuse and potential for discrimination.

A Well-Designed Regulatory Framework for Responsible Innovation

A well-designed regulatory framework can effectively balance the need for responsible innovation with the promotion of competition. This requires a nuanced approach that:

- Prioritizes safety and ethical considerations without unduly burdening innovation.

- Establishes clear and transparent standards that are consistently applied across the industry.

- Encourages collaboration between regulators, industry stakeholders, and researchers to develop adaptable and effective regulations.

- Provides incentives for responsible AI development, such as tax breaks for companies investing in ethical AI practices.

- Supports the development of open-source AI tools and datasets to foster innovation and collaboration.

Public Trust and Transparency in AI

The rapid advancement of artificial intelligence (AI) presents incredible opportunities, but also significant risks. For AI to truly benefit society, public trust is paramount. Without it, widespread adoption will be hampered, and the potential for misuse and harm significantly increases. Building and maintaining this trust requires a commitment to transparency and accountability in every stage of AI development and deployment.

Self-regulation, while seemingly efficient, often falls short in fostering this crucial public trust. The perception of potential conflicts of interest, where companies prioritize profit over safety or ethical considerations, can severely erode public confidence. This lack of independent oversight can lead to a “trust deficit,” making people hesitant to embrace AI technologies, even those with immense potential for good.

The Importance of Public Trust in AI Development

Public trust is essential for the successful integration of AI into various aspects of our lives. Without it, governments and individuals may be reluctant to adopt AI-powered systems in healthcare, finance, transportation, and other critical sectors. This hesitancy can stifle innovation and limit the positive impact AI could have on society. A lack of trust can also lead to increased regulatory scrutiny, potentially hindering the progress of beneficial AI technologies.

Conversely, high public trust encourages investment, collaboration, and the acceptance of AI solutions, accelerating progress and maximizing societal benefits.

The Impact of Self-Regulation on Public Perception of AI

Self-regulation, while offering the potential for agility and industry-specific expertise, often lacks the impartiality and transparency necessary to build public trust. The absence of external oversight can lead to the perception of bias, favoritism, and a lack of accountability. Companies might be tempted to downplay risks or exaggerate benefits to protect their interests, further eroding public confidence. This lack of independent verification can fuel public skepticism and ultimately hinder the acceptance of AI technologies.

For example, if a self-regulated AI system makes a significant error with potentially harmful consequences, the lack of external investigation could amplify public distrust.

Strategies for Promoting Transparency and Accountability in AI Development

Several strategies can effectively promote transparency and accountability in AI development. These include the establishment of independent auditing bodies to verify the safety and fairness of AI systems, the implementation of clear and accessible guidelines for AI development and deployment, and the proactive disclosure of data and algorithms used in AI systems. Furthermore, promoting open-source AI development can foster greater scrutiny and collaboration, encouraging a more transparent and accountable environment.

Finally, the development of standardized explainability methods for AI decision-making can help build public understanding and trust.

Visual Representation of Transparency, Accountability, and Public Trust in AI

Imagine a three-legged stool representing public trust in AI. Each leg represents a crucial element: transparency (clearly visible and understandable AI systems and processes), accountability (mechanisms for addressing errors and biases), and public engagement (open communication and dialogue between developers and the public). If any one leg is weak or missing, the stool becomes unstable and prone to collapse.

A strong and stable stool requires all three legs to be equally robust and interconnected.

The debate surrounding self-regulation within the AI industry is far from over. The concerns raised by former OpenAI board members underscore a critical need for careful consideration of how we govern the development and deployment of AI. While innovation is crucial, so is responsible development. The path forward likely involves a collaborative effort between industry leaders, policymakers, and the public to create a robust regulatory framework that fosters innovation while mitigating the inherent risks.

It’s a complex challenge, but one we must face head-on to ensure a future where AI benefits humanity as a whole.