A Battle Is Raging Over Open Source AI

A battle is raging over the definition of open-source AI, and it’s a fight worth watching. The very core of what constitutes “open” in the context of artificial intelligence is being fiercely debated, with implications far beyond the technical realm. This clash involves developers, researchers, corporations, and even governments, all grappling with the complex ethical, economic, and political ramifications of this rapidly evolving technology.

The stakes are high, as the future of AI – and perhaps even our society – hangs in the balance.

This isn’t just a semantic argument; it’s a struggle over control, access, and the very nature of innovation. On one side, we have the proponents of completely open and collaborative development, believing that freely sharing AI models and data will accelerate progress and democratize access to powerful technology. On the other, concerns about misuse, the potential for malicious actors to exploit open-source AI, and the economic interests of companies invested in proprietary models fuel a push for tighter control and restricted access.

The resulting tension is shaping the landscape of AI development in profound ways.

The Contested Aspects of Open Source AI

The seemingly straightforward concept of “open-source AI” is surprisingly fraught with complexity and disagreement. While the ideal of freely available AI models and algorithms sounds utopian, the reality is far more nuanced, with several key areas of contention impacting its definition, implementation, and ethical implications. These disputes stem from fundamental disagreements about data ownership, community governance, and the potential societal impacts of this rapidly evolving technology.

Data Accessibility and Usage Rights

The core of the open-source AI debate often revolves around data. Open-source projects require substantial datasets for training models, but the acquisition and usage of these datasets are frequently problematic. Many datasets are proprietary, requiring licensing agreements that may clash with the open-source ethos. Even publicly available datasets often contain biases or sensitive information, raising concerns about privacy and potential misuse.

The debate centers on whether access to training data should be completely unrestricted, subject to specific licenses (like Creative Commons), or even kept entirely private, with only the trained model released openly. This leads to questions about the reproducibility of results and the potential for inequitable access to powerful AI tools. For example, a large language model trained on a dataset heavily skewed towards English-language texts will likely perform poorly on other languages, highlighting the challenges of ensuring equitable access to data and preventing the reinforcement of existing biases.
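To make the skew concern concrete, here is a minimal sketch of how a project might check the language mix of a corpus before training. It assumes each document already carries a language tag; the function names, the 5% threshold, and the sample tags are hypothetical and chosen only for illustration.

```python
from collections import Counter


def language_shares(lang_tags):
    """Return the fraction of documents per language tag."""
    counts = Counter(lang_tags)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {lang: n / total for lang, n in counts.items()}


def underrepresented(shares, threshold=0.05):
    """List languages whose share falls below the (assumed) threshold."""
    return sorted(lang for lang, share in shares.items() if share < threshold)


# Hypothetical corpus metadata: 90 English, 7 Spanish, 3 Swahili documents.
tags = ["en"] * 90 + ["es"] * 7 + ["sw"] * 3
shares = language_shares(tags)
flagged = underrepresented(shares)
```

A check like this only surfaces the imbalance; deciding how to rebalance or license additional data is still the contested part.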

Community Governance and Contribution Models

Different open-source AI projects adopt diverse governance structures, leading to further contention. Some projects operate under a centralized model, with a core team making key decisions. Others employ more decentralized approaches, relying on community consensus or a meritocratic system based on contributions. The level of control exerted by the initial developers or maintainers significantly impacts the project’s direction, its inclusivity, and its ability to adapt to changing needs and demands.

Disagreements often arise regarding the criteria for contributions, the resolution of conflicts, and the overall decision-making process. For instance, a project governed by a small, homogeneous group might struggle to address biases embedded in the algorithms or to respond effectively to the needs of a diverse user base. In contrast, a truly decentralized model can face challenges in maintaining efficiency and preventing fragmentation.

Conflicts and Controversies in Open-Source AI Development

Several recurring controversies illustrate the challenges inherent in open-source AI. The development of powerful AI models capable of generating deepfakes or malicious code highlights the potential for misuse. While the open-source nature allows for scrutiny and potential mitigation of such risks, it also facilitates broader access to these tools, potentially amplifying negative consequences. Controversies have also arisen regarding the ethical implications of certain applications, such as the use of facial recognition technology or the potential for biased algorithms to perpetuate societal inequalities.

Furthermore, the lack of clear guidelines or regulations surrounding the development and deployment of open-source AI adds to the uncertainty and increases the risk of unintended negative outcomes. The debate about the responsibility of developers and users in mitigating these risks remains a central point of contention.

Ethical Concerns Related to Open-Source AI

Before listing potential ethical concerns, it’s crucial to understand that the open-source nature of AI itself isn’t inherently good or bad; it’s the applications and how they are developed and deployed that create ethical challenges. The following concerns are not exhaustive but highlight key areas demanding careful consideration:

- Bias and Discrimination: Open-source AI models trained on biased data can perpetuate and amplify existing societal biases, leading to unfair or discriminatory outcomes.

- Privacy Violations: The use of personal data in training open-source AI models raises serious privacy concerns, especially if data is not properly anonymized or secured.

- Misinformation and Malicious Use: The accessibility of open-source AI tools can be exploited to create and spread misinformation, generate deepfakes, or develop malicious software.

- Lack of Transparency and Accountability: The decentralized nature of some open-source projects can make it difficult to trace the origins of biases or to hold developers accountable for harmful outcomes.

- Environmental Impact: The computational resources required to train large open-source AI models can have a significant environmental impact, contributing to carbon emissions.

- Job Displacement: The automation potential of open-source AI raises concerns about job displacement in various sectors.

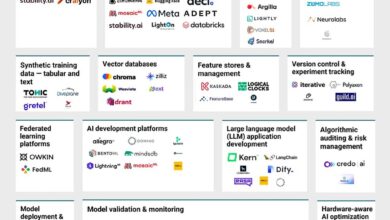

The Role of Large Language Models (LLMs)

Large Language Models (LLMs) are at the heart of the current open-source AI debate. Their ability to generate human-quality text, translate languages, and answer questions has sparked significant interest, but also raised crucial questions about accessibility, control, and potential societal impact. The very nature of these powerful tools makes them a key battleground in the fight over the future of AI.

The significance of LLMs in the open-source AI debate stems from their potential to democratize access to advanced AI capabilities. Historically, powerful AI models have been largely confined to large corporations and research institutions due to the significant computational resources and expertise required for their development and deployment. Open-sourcing LLMs could potentially level the playing field, allowing smaller organizations, researchers, and even individuals to leverage their capabilities.

Challenges in Open-Sourcing LLMs

Making LLMs truly open-source and accessible presents considerable challenges. The sheer size of these models demands substantial computational resources for training and inference, creating a barrier to entry for many. Furthermore, ensuring the responsible and ethical use of LLMs requires careful consideration of potential biases embedded in the training data and the mitigation of risks associated with malicious applications.

Finally, effective open-source collaboration necessitates robust community governance and ongoing maintenance, which are not always easy to achieve. The open-source community needs to address issues of data licensing, model versioning, and continuous improvement to make these powerful tools truly accessible.

Comparison of Open-Source and Closed-Source LLMs

Open-source LLMs, while often lagging behind their closed-source counterparts in terms of raw performance on benchmark tasks, offer advantages in terms of transparency, customization, and community-driven development. Closed-source LLMs, developed by companies like Google and OpenAI, benefit from significant investment in computational resources and expertise, leading to superior performance in many areas. However, their closed nature limits scrutiny and restricts customization possibilities.

The open-source approach, while potentially less performant initially, allows for community-driven improvements and adaptation to specific needs, potentially leading to specialized models surpassing closed-source solutions in niche applications. For example, a smaller, open-source model focused on medical transcription might outperform a larger, general-purpose closed-source model in that specific domain.

Potential Implications of Widespread Adoption

The widespread adoption of open-source LLMs could have profound implications across various sectors. Increased accessibility could spur innovation by empowering researchers and developers with previously unavailable tools. It could also foster the development of more diverse and inclusive AI systems, as community contributions could address biases and limitations present in existing models. However, it also raises concerns about the potential for misuse, including the generation of misinformation, the creation of sophisticated phishing campaigns, and the automation of harmful tasks.

Careful consideration of these risks and the development of appropriate safeguards are crucial for responsible implementation.

Hypothetical Scenario: Open-Source LLMs in Healthcare

Imagine a scenario where an open-source LLM is trained on a vast dataset of anonymized medical records, specifically focusing on rare diseases. This model could then be used by smaller clinics and hospitals with limited resources to improve diagnostic accuracy and personalize treatment plans for patients with these rare conditions. By making the model open-source, the community can continuously improve its accuracy and expand its knowledge base, benefiting patients worldwide.

This contrasts with a scenario where access to such a powerful diagnostic tool is restricted to large healthcare corporations with the resources to utilize proprietary closed-source LLMs. The open-source approach could lead to more equitable access to advanced healthcare technologies and significantly improve patient outcomes in underserved communities.

Economic and Political Implications

The rise of open-source AI presents a complex tapestry of economic and political ramifications, impacting industries, nations, and the very fabric of global power dynamics. Its potential to disrupt existing markets and reshape geopolitical landscapes is undeniable, demanding careful consideration of its implications. The speed of development and the decentralized nature of open-source projects make traditional regulatory approaches challenging, highlighting the need for innovative and collaborative solutions.

The economic impact of open-source AI is multifaceted and far-reaching. Its accessibility democratizes AI technology, empowering smaller companies and individuals to compete with larger corporations that previously held a monopoly on advanced AI capabilities. This could lead to increased innovation and competition across various sectors, from healthcare and finance to manufacturing and transportation. However, it also presents challenges, potentially leading to job displacement in certain sectors and requiring significant investment in workforce retraining and adaptation.

Economic Impacts on Various Industries

Open-source AI’s influence varies across industries. In healthcare, it could accelerate the development of diagnostic tools and personalized medicine, reducing costs and improving accessibility. Finance could see increased efficiency in fraud detection and risk management. Manufacturing may experience automation breakthroughs, leading to increased productivity but also potential job losses in manual labor. The agricultural sector could benefit from improved precision farming techniques, optimizing resource use and boosting yields.

However, these benefits are not uniformly distributed, and some industries may face steeper challenges in adapting to this technological shift than others. For example, industries heavily reliant on proprietary AI solutions may find themselves at a competitive disadvantage unless they quickly integrate open-source tools.

Geopolitical Implications of Open-Source AI

The open nature of open-source AI presents both opportunities and risks on the geopolitical stage. The ease of access and distribution could empower smaller nations and even non-state actors, potentially leveling the playing field in technological competition. However, this also raises concerns about the potential misuse of the technology for malicious purposes, such as the development of autonomous weapons systems or the spread of misinformation.

The global distribution of open-source AI necessitates international cooperation to establish ethical guidelines and safety protocols, preventing the technology from being weaponized or used to undermine national security. The lack of centralized control makes this a significant challenge. For example, the development of powerful LLMs in a decentralized manner could lead to unpredictable outcomes and potential instability if not carefully managed.

The Role of Governments and Regulatory Bodies

Governments and regulatory bodies face the critical task of navigating the complex landscape of open-source AI. A balanced approach is crucial, fostering innovation while mitigating potential risks. This involves establishing clear guidelines on data privacy, algorithmic transparency, and responsible AI development. International collaboration is essential to create consistent standards and prevent regulatory arbitrage, where companies move to jurisdictions with less stringent regulations.

The challenge lies in creating regulations that are both effective and adaptable to the rapid pace of technological advancement in this field. Examples of successful regulatory frameworks in other technological domains could offer valuable insights, but the unique characteristics of open-source AI necessitate a tailored approach.

Comparative Approaches to Regulating Open-Source AI

Different countries are adopting diverse approaches to regulating open-source AI. Some favor a more laissez-faire approach, prioritizing innovation and minimizing government intervention. Others opt for stricter regulations, emphasizing safety and ethical considerations. The European Union, for instance, has adopted the AI Act, a comprehensive regulation that includes specific provisions for open-source AI. Meanwhile, other nations may prioritize national security concerns, leading to more restrictive policies on the distribution and use of certain AI technologies.

These varying approaches highlight the complexities of achieving global consensus on open-source AI governance. A comparative analysis of these approaches can provide valuable insights for developing effective and globally consistent regulatory frameworks.

Visual Representation: The Interplay of Economic and Political Forces

Imagine a complex web. At the center is a large sphere representing “Open-Source AI.” Radiating outwards are numerous interconnected strands. Some strands, colored green, represent positive economic impacts: increased innovation, job creation in new sectors, and economic growth in developing nations. Others, colored red, depict negative economic consequences: job displacement, widening economic inequality, and potential market disruption.

Intertwined with these economic strands are political forces represented by blue strands: government regulations, international collaborations, national security concerns, and the potential for misuse. The interactions between the strands show a dynamic interplay; for instance, a strong green strand (increased innovation) might stimulate a stronger blue strand (government investment in AI research), while a strong red strand (job displacement) might trigger a stronger blue strand (government-led retraining programs).

The overall image conveys the intricate and often unpredictable relationship between economic and political factors in shaping the future of open-source AI. The strength and direction of each strand are constantly shifting, reflecting the ever-evolving nature of this technological landscape.

The Future of Open Source AI

The rapid advancements in artificial intelligence, particularly in the realm of large language models (LLMs), are fundamentally reshaping the technological landscape. The open-source movement plays a crucial role in this evolution, democratizing access to cutting-edge AI technologies and fostering innovation through collaborative development. Understanding the future trajectory of open-source AI is critical for navigating this transformative era. We can anticipate several key trends shaping its development and adoption over the next decade.

Open-source AI is poised for exponential growth, driven by a confluence of factors including increased community participation, the development of more efficient training methodologies, and the maturation of foundational models. This growth will not be without its challenges, however, including the need for robust governance models, ethical considerations, and addressing potential biases inherent in the data used to train these models.

Potential Future Trends in Open-Source AI Development and Adoption

The future of open-source AI will likely witness a shift towards more specialized and modular models. Instead of monolithic LLMs, we’ll see a rise in smaller, more efficient models tailored for specific tasks and domains. This modularity will allow for easier customization, faster training times, and reduced computational resources. Think of it like Lego bricks – individual components can be combined and recombined to create diverse and powerful AI systems.

This will also make open-source AI more accessible to smaller organizations and individuals who may lack the resources to train large-scale models. We can expect to see a proliferation of open-source tools and libraries designed to simplify the process of building, deploying, and managing these modular AI systems. This trend mirrors the success of modular software development, leading to increased flexibility and innovation.

Potential Technological Advancements Impacting the Open-Source AI Landscape

Several technological advancements are likely to significantly impact the open-source AI landscape. One key area is the development of more efficient training algorithms and hardware. Advances in areas like federated learning, which allows training models on decentralized datasets without compromising data privacy, will be critical. Furthermore, breakthroughs in hardware, such as specialized AI accelerators, will make training and deploying large models more feasible for a wider range of users and organizations.
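For readers who want to see the idea behind federated learning, here is a minimal sketch of federated averaging on a toy linear model. It is an illustration under stated assumptions, not a production recipe: the clients, their data, the learning rate, and the function names are all hypothetical, and real systems add secure aggregation, client sampling, and much larger models. The point it shows is that only model weights leave each client, never the raw data.

```python
def local_update(weights, data, lr=0.3, epochs=5):
    """Run a few gradient-descent steps on one client's private data.

    The model is y = w * x + b, trained with mean squared error.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by each client's number of examples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def train(clients, rounds=200):
    """The server loop: broadcast weights, collect local updates, average."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Two hypothetical clients holding private samples of y = 2x + 1.
clients = [
    [(0.0, 1.0), (0.5, 2.0)],
    [(1.0, 3.0), (0.25, 1.5), (0.75, 2.5)],
]
w, b = train(clients)
```

In this toy setup the shared model recovers the underlying line even though neither client ever shares its samples with the server or with the other client.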

Improvements in model compression techniques will allow for smaller, more efficient models to be deployed on resource-constrained devices, expanding the reach of open-source AI to mobile and embedded systems. This could unlock new applications in areas like healthcare, environmental monitoring, and smart agriculture.
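One common compression technique is quantization, which stores weights as small integers instead of floating-point numbers. The sketch below shows a simple symmetric 8-bit scheme with a single scale for the whole weight list. It is one of several possible schemes, and the function names and sample weights are assumptions made for illustration.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights; each one can now be stored in one byte instead of four.
weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

The trade-off is a small rounding error per weight (at most half the scale) in exchange for roughly a fourfold reduction in storage compared with 32-bit floats, which is what makes deployment on constrained devices more realistic.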

Predictions about the Future of Community Involvement in Open-Source AI Projects

Community involvement will be the lifeblood of open-source AI. We anticipate a growing diversification of contributors, with increased participation from researchers, developers, and even end-users. This will lead to a more inclusive and collaborative environment, fostering innovation and ensuring that open-source AI reflects the needs and perspectives of a broader range of stakeholders. The development of more robust and user-friendly tools and platforms will further facilitate community participation, lowering the barrier to entry for new contributors.

Successful projects will be those that effectively leverage the collective intelligence of their communities, fostering a culture of transparency, open communication, and mutual support. Think of the success of projects like Linux and Apache, which exemplify the power of collaborative development.

Potential Challenges and Opportunities for Open-Source AI in the Coming Years

The growth of open-source AI is not without its challenges. Addressing ethical concerns, such as bias mitigation and responsible AI development, will be paramount. Ensuring the security and robustness of open-source AI models against malicious attacks will also be critical. However, these challenges also present significant opportunities. Open-source AI offers the potential to democratize access to powerful AI technologies, leveling the playing field for smaller organizations and individuals.

It also fosters transparency and accountability, enabling greater scrutiny of AI models and their potential impact on society. By actively addressing these challenges, the open-source community can solidify its position as a leader in the responsible and ethical development of AI.

Timeline of Key Milestones and Anticipated Developments in Open-Source AI (Next Decade)

| Year | Milestone/Development | Description | Impact |
|---|---|---|---|
| 2024-2025 | Maturation of Modular AI Models | Increased adoption of smaller, specialized models tailored for specific tasks. | Improved efficiency and accessibility. |
| 2026-2027 | Advancements in Federated Learning | Wider adoption of federated learning techniques for privacy-preserving AI development. | Enhanced data privacy and collaboration. |
| 2028-2029 | Rise of Open-Source AI Hardware | Development of open-source hardware platforms optimized for AI training and inference. | Increased accessibility and affordability of AI development. |
| 2030-2034 | Widespread Adoption of Explainable AI (XAI) | Integration of XAI techniques into open-source models, improving transparency and trust. | Increased accountability and responsible AI development. |

The debate surrounding open-source AI is far from over. It’s a dynamic and evolving conversation, shaped by technological advancements, ethical considerations, and the shifting power dynamics in the global AI landscape. While the definition of “open-source AI” remains contested, the ongoing discussion is crucial. It forces us to confront fundamental questions about the responsible development and deployment of AI, ensuring that this powerful technology benefits humanity as a whole.

The future of AI likely hinges on finding a balance between fostering innovation and mitigating potential risks, a balance that will require ongoing dialogue and collaboration among all stakeholders.