A Short History of AI: From Dreams to Deep Learning

The history of AI is a journey from philosophical musings about thinking machines to the sophisticated algorithms powering our modern world. We’ll explore the highs and lows, the breakthroughs and the setbacks, the optimism and the “AI winters,” charting the evolution of artificial intelligence from its nascent stages to its current, rapidly expanding influence.

From Alan Turing’s groundbreaking work to the Dartmouth Workshop that officially launched the field, we’ll witness the birth of AI programming languages, the rise and fall of expert systems, and the revolutionary impact of machine learning and deep learning. We’ll uncover the key figures, pivotal moments, and technological advancements that shaped this transformative field, painting a picture of both incredible progress and the ongoing challenges that lie ahead.

Early Days of AI (Pre-1950s)

The seeds of artificial intelligence were sown long before the term itself was coined. The quest to create machines that could mimic human thought wasn’t a sudden invention, but rather the culmination of centuries of philosophical inquiry and groundbreaking mathematical developments. This period, before the 1950s, laid the essential groundwork, establishing the fundamental concepts and tools that would later fuel the explosive growth of the field. The philosophical groundwork involved grappling with the very nature of thought and intelligence.

Ancient myths often featured artificial beings imbued with intelligence, but the serious consideration of artificial intelligence as a possibility began with thinkers like René Descartes and Gottfried Wilhelm Leibniz. Descartes’ mechanistic view of the body, suggesting it functioned like a complex machine, opened the door to the possibility of creating artificial systems capable of similar functions. Leibniz’s work on formal logic, aiming to reduce reasoning to a system of calculable symbols, provided a crucial mathematical foundation for future AI research.

These thinkers, though not directly involved in what we now call AI, laid the conceptual groundwork for exploring the possibility of artificial minds.

Alan Turing’s Contributions

Alan Turing’s contributions are pivotal to the history of AI. His 1950 paper, “Computing Machinery and Intelligence,” introduced the now-famous Turing Test, which he called the “imitation game,” as a benchmark for machine intelligence. The Turing Test proposes that a machine can be considered intelligent if a human evaluator cannot reliably distinguish its responses from those of a human in a blind, text-based conversation. This paper not only offered a concrete way to assess machine intelligence but also helped frame the field, shifting the question from whether a machine can “think” to whether its behavior is indistinguishable from a human’s, regardless of the underlying mechanism.

Beyond the Turing Test, Turing’s work on theoretical computer science, including his conceptualization of the Turing machine – a theoretical model of computation – provided a foundational mathematical framework for understanding the capabilities and limitations of computation itself, directly influencing the development of AI algorithms and architectures.

Early Attempts at “Thinking Machines”

Even before the formalization of AI as a field, several early attempts were made to create machines that exhibited some form of intelligent behavior. These early endeavors, while rudimentary by today’s standards, represent important milestones in the journey towards artificial intelligence. For example, Charles Babbage’s Analytical Engine, though never completed, was a conceptual design for a programmable mechanical computer.

While not designed explicitly for AI, its architecture foreshadowed the capabilities of future computers that would be used for AI research. Similarly, early attempts at creating chess-playing machines, though limited in their ability, demonstrated a nascent effort to program complex problem-solving capabilities into mechanical devices. The limitations of these early machines were primarily due to technological constraints.

The lack of sufficient computing power and memory severely restricted the complexity of the algorithms that could be implemented. Furthermore, the understanding of how to represent knowledge and reasoning processes in a computational form was still in its infancy. These early projects, however, provided invaluable experience and insights, paving the way for the more sophisticated approaches that emerged in the later decades.

The Rise of Machine Learning (1980s-2000s)

The 1980s marked a significant shift in the AI landscape, moving away from the symbolic reasoning approaches that dominated earlier decades and towards a data-driven paradigm: machine learning. This change was fueled by several factors, including the increasing availability of computational power and the realization that tackling complex problems often required learning from data rather than relying on explicitly programmed rules.

The focus shifted from crafting intricate expert systems to developing algorithms that could learn patterns and make predictions from large datasets. The development of efficient algorithms was crucial to this shift. Backpropagation, a method for training artificial neural networks, played a pivotal role. This algorithm allowed for the effective adjustment of the network’s internal parameters, enabling it to learn complex mappings between inputs and outputs.

While the core ideas behind backpropagation date back to the 1960s and 1970s, limited computing power long hampered their practical application. The algorithm was popularized in 1986 by Rumelhart, Hinton, and Williams, and the increased computational capabilities of the decade finally made it a viable tool for training reasonably sized neural networks. This led to a renewed interest in neural networks and spurred advancements in other areas of machine learning.
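
To make the idea of “adjusting internal parameters” concrete, here is a minimal sketch of backpropagation in plain Python. It assumes a tiny network (two inputs, three sigmoid hidden units, one sigmoid output), a squared-error loss, and the XOR problem as toy data; the function names, layer sizes, learning rate, and seed are illustrative choices for this sketch, not anything prescribed by the history above.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Forward pass: returns the hidden activations and the network output."""
    W1, b1, W2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output

def loss(params, data):
    """Mean of 0.5 * (output - target)^2 over the dataset."""
    return sum(0.5 * (forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)

def backprop(params, data):
    """Gradients of the mean loss with respect to every parameter (chain rule)."""
    W1, b1, W2, b2 = params
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    for x, t in data:
        hidden, y = forward(params, x)
        # Error signal at the output: dL/dz = (y - t) * sigmoid'(z)
        delta_out = (y - t) * y * (1.0 - y)
        gb2 += delta_out
        for j, h in enumerate(hidden):
            gW2[j] += delta_out * h
            # Propagate the error backwards through hidden unit j
            delta_h = delta_out * W2[j] * h * (1.0 - h)
            gb1[j] += delta_h
            for i, xi in enumerate(x):
                gW1[j][i] += delta_h * xi
    n = len(data)
    return ([[g / n for g in row] for row in gW1],
            [g / n for g in gb1],
            [g / n for g in gW2],
            gb2 / n)

def train(params, data, lr=0.5, epochs=200):
    """Plain gradient descent; returns new parameters, leaving the input untouched."""
    W1, b1, W2, b2 = params
    for _ in range(epochs):
        gW1, gb1, gW2, gb2 = backprop((W1, b1, W2, b2), data)
        W1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)]
        b1 = [b - lr * g for b, g in zip(b1, gb1)]
        W2 = [w - lr * g for w, g in zip(W2, gW2)]
        b2 = b2 - lr * gb2
    return (W1, b1, W2, b2)

def init_params(n_hidden=3, seed=0):
    """Small random weights; sizes and seed are arbitrary choices for this sketch."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return (W1, [0.0] * n_hidden, W2, 0.0)

# Toy dataset: the XOR function
XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

if __name__ == "__main__":
    start = init_params()
    print("loss before:", loss(start, XOR))
    print("loss after: ", loss(train(start, XOR), XOR))
```

Each call to `backprop` costs roughly as much as a forward pass, which is why the method became practical once hardware caught up. Whether a network this small fully solves XOR depends on the initialization and the number of epochs, so treat the printed losses as a demonstration of learning rather than a guarantee of convergence.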

Key Machine Learning Algorithms

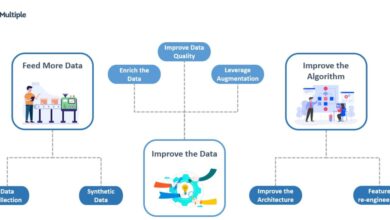

The 1980s and 90s witnessed the development and refinement of numerous machine learning algorithms. These algorithms provided different approaches to learning from data, each with its own strengths and weaknesses. The choice of algorithm depended heavily on the nature of the problem and the available data.

Machine Learning Paradigms

Machine learning is broadly categorized into several paradigms, each with its unique approach to learning from data. Supervised learning involves training a model on a labeled dataset, where each data point is associated with a known outcome. The model learns to map inputs to outputs based on this labeled data. Unsupervised learning, in contrast, deals with unlabeled data, where the model aims to discover underlying patterns and structures in the data without explicit guidance.

Reinforcement learning focuses on training agents to interact with an environment and learn optimal actions through trial and error, guided by rewards and penalties. Each paradigm offers distinct advantages and is suitable for different types of problems.
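
The three paradigms are easier to tell apart side by side. The sketch below uses deliberately tiny stand-ins chosen for this illustration: a one-nearest-neighbor classifier for supervised learning, two-cluster k-means on one-dimensional points for unsupervised learning, and an epsilon-greedy multi-armed bandit for reinforcement learning. All function names, data values, and reward probabilities are hypothetical.

```python
import random

def nearest_neighbor_predict(labeled, x):
    """Supervised: use labeled (feature, label) pairs to label a new point."""
    return min(labeled, key=lambda pair: abs(pair[0] - x))[1]

def two_means(points, iterations=10):
    """Unsupervised: find two cluster centers in unlabeled 1-D data.

    Assumes the points contain at least two distinct values.
    """
    c0, c1 = min(points), max(points)
    for _ in range(iterations):
        group0 = [p for p in points if abs(p - c0) <= abs(p - c1)]
        group1 = [p for p in points if abs(p - c0) > abs(p - c1)]
        c0, c1 = sum(group0) / len(group0), sum(group1) / len(group1)
    return c0, c1

def epsilon_greedy_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Reinforcement: learn which action pays best purely from rewards."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)
    values = [0.0] * len(reward_probs)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(reward_probs))                 # explore
        else:
            arm = max(range(len(values)), key=values.__getitem__)  # exploit
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]
    return values, counts

if __name__ == "__main__":
    labeled = [(1.0, "small"), (1.5, "small"), (9.0, "large"), (8.0, "large")]
    print(nearest_neighbor_predict(labeled, 7.2))
    print(two_means([1.0, 1.2, 0.8, 9.0, 9.5, 8.5]))
    print(epsilon_greedy_bandit([0.1, 0.9]))
```

The difference lies in the feedback each learner receives: known answers for every example, no answers at all, or only a reward signal after acting.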

Comparison of Machine Learning Algorithms

The following table compares three major machine learning algorithms: Decision Trees, Support Vector Machines, and Naive Bayes.

| Algorithm | Description | Strengths | Weaknesses |
|---|---|---|---|
| Decision Tree | A tree-like model that uses a series of decisions to classify data points. | Easy to understand and interpret; can handle both numerical and categorical data; relatively fast to train. | Prone to overfitting; can be unstable (small changes in data can lead to large changes in the tree structure). |
| Support Vector Machine (SVM) | A model that finds the optimal hyperplane to separate data points into different classes. | Effective in high-dimensional spaces; relatively memory efficient; versatile due to the use of kernel functions. | Can be computationally expensive for large datasets; the choice of kernel function can significantly impact performance; less interpretable than decision trees. |
| Naive Bayes | A probabilistic classifier based on Bayes’ theorem with strong (naive) independence assumptions between features. | Simple and efficient; works well with high-dimensional data; relatively insensitive to irrelevant features. | The independence assumption is often violated in real-world datasets; performance can be affected by correlated features. |
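
To show what “a series of decisions” means in practice, here is a rough sketch of the single step a decision tree repeats at every node: choosing the feature and threshold that best separate the classes. It assumes numeric features and uses Gini impurity as the split criterion, one common choice among several; the helper names and the four-row dataset are made up for this example.

```python
def gini(labels):
    """Gini impurity: 0.0 when all labels in the group agree."""
    if not labels:
        return 0.0
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(rows):
    """rows: list of (features, label) pairs.

    Returns (feature_index, threshold, weighted_gini) for the best single split.
    """
    best = None
    for f in range(len(rows[0][0])):
        values = sorted({x[f] for x, _ in rows})
        # Candidate thresholds sit halfway between neighboring feature values
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [y for x, y in rows if x[f] <= threshold]
            right = [y for x, y in rows if x[f] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[2]:
                best = (f, threshold, score)
    return best

if __name__ == "__main__":
    rows = [((2.0, 7.0), "a"), ((3.0, 1.0), "a"),
            ((8.0, 6.0), "b"), ((9.0, 2.0), "b")]
    print(best_split(rows))  # → (0, 5.5, 0.0)
```

A full tree applies `best_split` recursively to each resulting group. Stopping that recursion early, or pruning afterwards, is the usual remedy for the overfitting listed in the table.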

The Deep Learning Revolution (2010s-Present)

The 2010s witnessed an explosive growth in the field of artificial intelligence, largely driven by advancements in deep learning. This subfield of machine learning utilizes artificial neural networks with multiple layers (hence “deep”) to analyze data and extract increasingly complex features. This breakthrough unlocked capabilities previously unimaginable, leading to significant impacts across numerous sectors. Deep learning’s success hinges on two key factors: the availability of massive datasets (“big data”) and a dramatic increase in computational power, particularly through the advent of powerful GPUs and cloud computing.

These advancements allowed researchers to train increasingly complex neural networks, achieving unprecedented levels of accuracy in various tasks.

Key Deep Learning Architectures

Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) emerged as two dominant architectures during this period. CNNs excel at processing grid-like data, such as images and videos, leveraging convolutional layers to identify patterns and features regardless of their location within the input. Their success is evident in image classification, object detection, and image generation tasks, powering applications like self-driving cars and medical image analysis.

RNNs, on the other hand, are designed to process sequential data like text and time series. Their recurrent connections allow them to maintain a “memory” of past inputs, enabling applications in natural language processing, machine translation, and speech recognition. The development of Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks significantly improved the ability of RNNs to handle long-range dependencies in sequences.
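
The “memory” provided by recurrent connections can be seen in a few lines. This is a minimal sketch of a recurrent cell with a single scalar hidden state, assuming a `tanh` activation and hand-picked weights (`w_x`, `w_h`, and `b` are illustrative values, not learned ones).

```python
import math

def rnn_forward(inputs, w_x=1.0, w_h=0.5, b=0.0):
    """Run a one-unit recurrent cell over a sequence; returns every hidden state."""
    h = 0.0  # hidden state before any input has been seen
    states = []
    for x in inputs:
        # The new state depends on the current input AND the previous state
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

if __name__ == "__main__":
    print(rnn_forward([1.0, 0.0, 0.0]))           # the early input echoes through later steps
    print(rnn_forward([0.0, 0.0, 0.0]))           # nothing to remember
    print(rnn_forward([1.0, 0.0, 0.0], w_h=0.0))  # no recurrence, so no memory
```

In the first sequence the trace of the initial input shrinks at every step. That fading is a simple picture of why plain RNNs struggle with long-range dependencies, the problem that gated designs such as LSTM and GRU were built to ease.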

The Role of Big Data and Computing Power

The success of deep learning is inextricably linked to the exponential growth of available data and computing power. The sheer volume of data available—from social media posts to medical records to satellite imagery—provides the raw material for training deep learning models. However, processing this data requires immense computational resources. The development of Graphics Processing Units (GPUs), initially designed for gaming, proved exceptionally well-suited for the parallel processing demands of deep learning, significantly accelerating training times.

Cloud computing platforms further amplified this capability, providing researchers and developers access to vast computing resources on demand. For example, the training of large language models like GPT-3 required massive datasets and substantial computational power spread across numerous GPUs.

A Deep Neural Network Architecture

Imagine a deep neural network as a layered cake. Each layer performs a specific transformation on the data passed to it. Let’s consider a simple example of an image classification network: The first layer is the input layer, which receives the raw image data (e.g., pixel values). This data is then passed to the convolutional layers. These layers employ filters that scan the image, detecting features like edges, corners, and textures.

Multiple convolutional layers progressively extract more complex features from the image. A pooling layer typically follows each convolutional layer, reducing the spatial dimensions of the feature maps, making the network more robust to small variations in the input and reducing computational cost. After several convolutional and pooling layers, the processed data is flattened and passed to one or more fully connected layers.

These layers combine the extracted features to produce a higher-level representation of the image. Finally, the output layer produces the classification result (e.g., the probability that the image belongs to each category). Each layer is composed of numerous interconnected nodes (neurons), with weights and biases that are adjusted during the training process to optimize the network’s performance. The activation functions applied at each layer introduce non-linearity, enabling the network to learn complex relationships in the data.

The entire process resembles a hierarchical feature extraction process, where simpler features are combined to form more complex ones. This layered structure allows deep learning models to learn highly abstract representations of data, enabling them to achieve state-of-the-art performance in various tasks.
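
The layer-by-layer flow described above can be traced in a short sketch. It is a forward pass only, in plain Python, with one convolutional layer, a ReLU activation, 2x2 max pooling, a flatten step, one fully connected layer, and a softmax output. The 5x5 “image,” the edge-detecting filter, and the dense weights are hand-picked for illustration; in a real network the filter and weights would be learned during training.

```python
import math

def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def relu(fmap):
    """Non-linearity: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Keep the strongest response in each non-overlapping 2x2 block."""
    return [[max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
             for c in range(0, len(fmap[0]) - 1, 2)]
            for r in range(0, len(fmap) - 1, 2)]

def flatten(fmap):
    return [v for row in fmap for v in row]

def dense(vector, weights, biases):
    """Fully connected layer: one weighted sum per output unit."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# A 5x5 image that is dark on the left and bright on the right
IMAGE = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# A filter that responds to dark-to-bright vertical edges
EDGE_KERNEL = [[-1.0, 1.0], [-1.0, 1.0]]
# Hand-picked weights for two classes: "edge" and "no edge"
DENSE_W = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.0, 0.0, 0.0]]
DENSE_B = [0.0, 0.0]

def classify(image):
    features = max_pool2x2(relu(conv2d(image, EDGE_KERNEL)))
    return softmax(dense(flatten(features), DENSE_W, DENSE_B))

if __name__ == "__main__":
    print(classify(IMAGE))
```

Stacking several such convolution and pooling stages, each with many learned filters, gives the hierarchical feature extraction described above.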

Current Trends and Future Directions

The rapid advancements in AI are transforming our world at an unprecedented pace. We’re moving beyond the theoretical and into a reality where AI systems are deeply integrated into various aspects of our lives, raising crucial questions about ethics, responsibility, and the future of humanity. This section explores the current trends shaping the AI landscape, examines the ethical considerations, and offers a glimpse into the potential future of this transformative technology. AI’s impact spans numerous sectors, creating both immense opportunities and significant challenges.

Understanding these trends is crucial for navigating the complex implications of this powerful technology and ensuring its responsible development and deployment.

Ethical Considerations in AI Development and Deployment

The increasing sophistication of AI systems necessitates a serious consideration of ethical implications. Bias in algorithms, for instance, can perpetuate and amplify existing societal inequalities. Facial recognition systems, trained on datasets lacking diversity, have shown higher error rates for individuals with darker skin tones. Similarly, AI-powered loan applications might discriminate against certain demographic groups if the training data reflects historical biases.

Addressing these issues requires careful data curation, algorithmic transparency, and ongoing monitoring for unintended consequences. Furthermore, questions of accountability and responsibility in cases of AI-driven errors or harm remain complex and require robust legal and regulatory frameworks. The potential for misuse of AI in surveillance, autonomous weapons systems, and the spread of misinformation also demands proactive ethical guidelines and international cooperation.
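
One simple form of the ongoing monitoring mentioned above is to compare error rates across groups. The sketch below assumes each prediction is logged with a group attribute, the true label, and the predicted label; the record layout, group names, and sample values are hypothetical, and a gap in error rates is only a starting point for investigation, not a complete fairness audit.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if truth != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

if __name__ == "__main__":
    # Made-up evaluation log for two groups
    log = [
        ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
        ("B", 1, 0), ("B", 0, 0), ("B", 1, 0), ("B", 0, 0),
    ]
    rates = error_rate_by_group(log)
    print(rates)  # → {'A': 0.25, 'B': 0.5}
    print("gap:", max(rates.values()) - min(rates.values()))
```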

AI Applications Across Sectors

AI is rapidly becoming integral to various industries. In healthcare, AI assists in disease diagnosis, drug discovery, and personalized medicine. For example, AI algorithms can analyze medical images (like X-rays and MRIs) quickly and, on some narrowly defined tasks, with accuracy comparable to that of human experts, aiding in early detection of cancers and other critical conditions. In finance, AI powers fraud detection systems, algorithmic trading, and risk assessment models.

Sophisticated algorithms can identify unusual transaction patterns indicative of fraudulent activity, significantly improving security and reducing financial losses. The transportation sector witnesses the rise of autonomous vehicles, relying heavily on AI for navigation, object recognition, and decision-making. Self-driving cars promise to improve road safety, reduce traffic congestion, and enhance accessibility for individuals with limited mobility. However, challenges related to safety, regulation, and ethical considerations (such as accident responsibility) still need to be addressed.

Potential Future Developments in AI

The future of AI holds immense potential. A significant area of focus will be the development of more robust and reliable AI systems capable of handling uncertainty and unexpected situations.

- Explainable AI (XAI): Increased focus on creating AI systems that can explain their reasoning and decision-making processes, fostering trust and accountability.

- General-purpose AI: Progress towards creating AI systems with human-level intelligence and adaptability, capable of performing a wide range of tasks.

- Human-AI Collaboration: Developing AI systems that work effectively alongside humans, augmenting human capabilities rather than replacing them.

- AI for Scientific Discovery: Utilizing AI to accelerate scientific breakthroughs in areas such as materials science, drug discovery, and climate modeling.

- AI-driven Automation: Further automation of various tasks across industries, leading to increased efficiency and productivity.

Challenges and Opportunities in Creating Robust, Explainable, and Ethical AI Systems

Creating truly robust, explainable, and ethical AI systems presents significant challenges. The complexity of AI algorithms often makes it difficult to understand their decision-making processes, leading to a “black box” problem. This lack of transparency can hinder trust and accountability. Furthermore, ensuring fairness and mitigating bias in AI systems requires careful consideration of data collection, algorithm design, and ongoing monitoring.

However, these challenges also present significant opportunities. The development of explainable AI (XAI) techniques, for instance, aims to address the “black box” problem by making AI decision-making more transparent and understandable. This will foster trust and enable better oversight and control. Moreover, investing in research on fairness, accountability, and transparency (FAT) in AI is crucial for building ethical and responsible AI systems.

By addressing these challenges proactively, we can harness the transformative potential of AI while mitigating its risks and ensuring a future where AI benefits all of humanity.

The story of AI is far from over. While we’ve made astonishing progress, the journey continues, with ethical considerations, explainable AI, and the pursuit of even more robust and intelligent systems at the forefront. From self-driving cars to medical diagnoses, AI’s influence is undeniable and set to keep growing. Understanding its history allows us to better navigate its future, shaping a technological landscape that benefits all of humanity.