AI Chip Architectures Embrace Software

AI has propelled chip architecture towards a tighter bond with software, revolutionizing how we design and build computer chips. Forget the old days of purely hardware-focused designs; we’re now in an era of co-design, where software and hardware are intricately intertwined from the very beginning. This shift isn’t just incremental; it’s a fundamental change driven by the insatiable demands of AI and the incredible advancements in compiler technology and high-level synthesis tools.

The result? Chips that are faster, more energy-efficient, and far more adaptable to the ever-evolving software landscape. But what does this actually mean for developers, and what challenges lie ahead?

This tighter integration is impacting everything from software development methodologies to the very tools we use to design chips. We’ll explore how domain-specific languages (DSLs) are emerging as key players, allowing developers to tailor software directly to the specific hardware architecture. We’ll also dive into the fascinating world of hardware-software co-design techniques and the powerful EDA tools that make it all possible.

Get ready for a deep dive into the heart of this exciting technological revolution!

The Evolution of Chip Architecture

The design of computer chips has undergone a dramatic transformation, shifting from a primarily hardware-centric approach to a more holistic co-design methodology. This evolution is largely driven by the increasing complexity of software and the relentless demand for higher performance and lower energy consumption in modern computing systems. Early chip design focused heavily on optimizing hardware performance, often at the expense of software efficiency.

The rise of AI, however, has necessitated a tighter integration between hardware and software, demanding a new paradigm.

The Shift from Hardware-Centric to Co-design

Historically, chip architects focused primarily on optimizing hardware components like transistors, logic gates, and memory structures. Software was considered a secondary concern, often adapting to the limitations of the underlying hardware. This hardware-centric approach led to efficient hardware but sometimes resulted in software that was less optimized or harder to develop for specific hardware architectures. The advent of sophisticated software tools and the increasing complexity of software applications, particularly in areas like AI, have fundamentally changed this perspective.

Now, chip architects and software engineers collaborate closely throughout the design process, considering both hardware and software constraints simultaneously. This co-design approach allows for the creation of chips that are highly optimized for specific software tasks, resulting in improved performance and energy efficiency.

Key Technological Advancements

Several key technological advancements have enabled this transition to co-design. Compiler technology is one of them: modern compilers perform sophisticated optimizations and generate efficient machine code tailored to the target hardware architecture. High-level synthesis (HLS) tools have also played a significant role. HLS lets designers describe hardware functionality in high-level languages such as C, C++, or SystemC, and automates much of the translation from that description into a register-transfer-level (RTL) hardware implementation.

This significantly reduces the design time and allows for easier exploration of different architectural trade-offs. Furthermore, the development of specialized hardware accelerators for specific AI algorithms, like Tensor Processing Units (TPUs), demonstrates the growing importance of software-aware hardware design. These accelerators are designed from the ground up to efficiently execute specific AI workloads, leveraging knowledge of the algorithms and data structures involved.

Performance and Energy Efficiency Comparison

The following table compares the performance characteristics and energy efficiency of traditional hardware-centric chips versus those designed with a tighter software-hardware bond:

| Architecture | Performance | Energy Efficiency | Development Time |
|---|---|---|---|
| Hardware-Centric | Generally good for general-purpose tasks, but may lack optimization for specific applications. | Can be less efficient for specialized tasks, leading to higher power consumption. | Relatively faster for simple designs, but complex designs can be time-consuming. |
| Co-designed (Software-Aware) | Highly optimized for specific applications, leading to significantly higher performance in target domains. For example, AI accelerators demonstrate significant speedups compared to general-purpose CPUs for AI workloads. | Improved energy efficiency due to tailored hardware and optimized software. AI accelerators often demonstrate lower power consumption per operation compared to general-purpose CPUs for AI tasks. | Longer development time due to the iterative process of hardware-software co-optimization. However, the long-term gains in performance and energy efficiency often outweigh the initial investment. |

Impact on Software Development Methodologies

The increasingly tight integration of hardware and software, driven by advancements in AI and chip design, is fundamentally reshaping software development methodologies. We’re moving beyond the traditional model of writing software for generic hardware towards a more collaborative and specialized approach, where hardware and software are co-designed from the outset to maximize performance and efficiency. This shift calls for new tools, new processes, and a different development workflow.

Traditional sequential development, where hardware is designed first and software is adapted later, is becoming less efficient. Instead, concurrent development, with hardware and software engineers working in parallel and iteratively, is gaining prominence. This iterative approach allows for early feedback and optimization, leading to improved performance and reduced development time. This co-design approach requires increased communication and collaboration between hardware and software teams, often involving specialized tools and platforms for seamless information exchange and version control.

Domain-Specific Languages and Hardware Optimization

Domain-specific languages (DSLs) are playing a crucial role in bridging the gap between software and hardware. DSLs are programming languages tailored to a specific problem domain, allowing developers to express algorithms and computations in a way that’s naturally aligned with the underlying hardware architecture. Instead of writing generic code that might not fully utilize the capabilities of the hardware, developers can use DSLs to express their intentions in a manner that directly maps to the hardware’s strengths, resulting in significantly improved performance.

For example, a DSL designed for a specific AI accelerator might allow developers to express complex neural network computations in a highly efficient and optimized manner, leveraging the accelerator’s specialized features and minimizing overhead. This direct mapping improves performance and reduces the need for extensive low-level optimizations.
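The idea of a DSL mapping directly onto accelerator features can be sketched with a tiny embedded DSL. Everything here is hypothetical and invented for illustration: the `Tensor` and `Op` classes, the `relu` helper, and the `FUSED_DENSE` instruction do not belong to any real accelerator or framework. The sketch only shows how a domain-level expression can be lowered to a single specialized instruction instead of three generic ones.

```python
from dataclasses import dataclass


class Expr:
    """Operator overloads shared by every node of the expression tree."""

    def __matmul__(self, other):
        return Op("matmul", (self, other))

    def __add__(self, other):
        return Op("add", (self, other))


@dataclass(frozen=True)
class Tensor(Expr):
    name: str  # a named input living in accelerator memory (hypothetical)


@dataclass(frozen=True)
class Op(Expr):
    kind: str    # "matmul", "add" or "relu"
    args: tuple  # child expressions


def relu(x):
    return Op("relu", (x,))


def _is(node, kind):
    return isinstance(node, Op) and node.kind == kind


def lower(node, program):
    """Append instructions for `node` to `program`; return the name of its result."""
    if isinstance(node, Tensor):
        return node.name
    # Pattern relu(matmul(a, b) + c): emit one fused instruction (an assumed
    # accelerator feature) instead of three separate ones.
    if _is(node, "relu") and _is(node.args[0], "add") and _is(node.args[0].args[0], "matmul"):
        add = node.args[0]
        matmul = add.args[0]
        sources = [lower(arg, program) for arg in (*matmul.args, add.args[1])]
        opcode = "FUSED_DENSE"
    else:
        sources = [lower(arg, program) for arg in node.args]
        opcode = node.kind.upper()
    dest = f"t{len(program)}"
    program.append((opcode, dest, *sources))
    return dest


x, w, b = Tensor("x"), Tensor("w"), Tensor("b")
fused = []
lower(relu(x @ w + b), fused)
print(fused)  # [('FUSED_DENSE', 't0', 'x', 'w', 'b')]
```

Without the `relu` wrapper, the same lowering falls back to separate `MATMUL` and `ADD` instructions, which is the kind of overhead a hardware-aware DSL tries to avoid.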

Software Development Tools and Frameworks for Co-designed Hardware

Several software development tools and frameworks are emerging to support the co-design approach. These tools often provide abstractions that hide the complexities of the underlying hardware, allowing developers to focus on the higher-level aspects of their applications. For instance, some frameworks offer high-level APIs that automatically optimize code for specific hardware accelerators, freeing developers from the burden of manual optimization.

Others provide sophisticated simulation and profiling tools that allow developers to accurately assess the performance of their software on the target hardware before deployment. Examples include specialized compilers that generate highly optimized code for specific architectures, debuggers that provide insights into hardware-software interactions, and frameworks that simplify the development of parallel and distributed applications for multi-core processors and specialized accelerators.

These tools aim to streamline the development process and reduce the time and effort required to create high-performance applications for co-designed hardware.

Hardware-Software Co-design Techniques and Tools

The increasing complexity of modern systems necessitates a holistic approach to design, moving beyond the traditional siloed development of hardware and software. Hardware-software co-design offers a powerful solution, enabling optimized integration and performance. This approach emphasizes concurrent development and iterative refinement, ensuring that hardware and software components are tailored to each other from the outset. This results in systems that are more efficient, reliable, and cost-effective.

Hardware-software co-design relies on a suite of techniques and tools to manage the inherent complexities. These techniques aim to optimize resource allocation, communication pathways, and overall system performance. Effective co-design requires careful planning, robust tools, and a deep understanding of both hardware and software architectures.

Hardware-Software Partitioning

Hardware-software partitioning is a critical initial step in co-design. It involves deciding which functionalities will be implemented in hardware (for speed and efficiency) and which in software (for flexibility and adaptability). This decision depends on factors like performance requirements, cost constraints, power consumption targets, and the availability of suitable hardware components. For example, a computationally intensive image processing algorithm might be partially implemented in hardware (e.g., using a dedicated FPGA) for speed, while the user interface and control logic remain in software.

This partitioning process often involves exploring various trade-offs and employing optimization algorithms to find the best balance between hardware and software resources.
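As a rough illustration of the trade-off search described above, the sketch below tries every subset of tasks as a hardware candidate and keeps the one with the lowest total runtime that fits an area budget. The task names, timings, and area figures are made-up placeholders, and the model assumes tasks run one after another; real partitioning tools use far richer cost models and heuristics, since exhaustive search does not scale.

```python
from itertools import combinations

# Hypothetical per-task estimates: runtime in software, runtime in hardware,
# and the hardware area (arbitrary units) needed to implement the task.
TASKS = {
    "image_filter": {"sw_ms": 40, "hw_ms": 4, "area": 60},
    "fft":          {"sw_ms": 30, "hw_ms": 5, "area": 50},
    "ui":           {"sw_ms": 5,  "hw_ms": 5, "area": 30},
    "control":      {"sw_ms": 2,  "hw_ms": 2, "area": 10},
}


def partition(tasks, area_budget):
    """Return (total_ms, hardware_set) minimizing runtime within the area budget."""
    names = list(tasks)
    # Baseline: everything stays in software.
    best = (sum(t["sw_ms"] for t in tasks.values()), frozenset())
    for size in range(1, len(names) + 1):
        for hw in combinations(names, size):
            if sum(tasks[n]["area"] for n in hw) > area_budget:
                continue  # does not fit on the chip
            total = sum(
                tasks[n]["hw_ms"] if n in hw else tasks[n]["sw_ms"] for n in names
            )
            if total < best[0]:
                best = (total, frozenset(hw))
    return best


total_ms, in_hardware = partition(TASKS, area_budget=100)
print(total_ms, sorted(in_hardware))  # 41 ['image_filter']
```

With these placeholder numbers, only the compute-heavy filter moves to hardware, while the user interface and control logic stay in software because hardware gives them no speedup.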

Communication Optimization

Once the partitioning is complete, optimizing communication between the hardware and software components is crucial. Inefficient communication can severely bottleneck performance. Techniques like memory mapping, direct memory access (DMA), and specialized communication protocols are used to minimize latency and maximize throughput. For instance, DMA allows the hardware to directly access and transfer data to/from memory without CPU intervention, significantly reducing the software overhead.

Careful consideration of data formats and communication interfaces also plays a key role in achieving optimal communication efficiency.
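A first-order cost model can make the DMA trade-off concrete. The sketch below assumes a transfer costs a fixed setup time plus size divided by bandwidth; the bandwidth and setup figures are illustrative assumptions, not measurements of any real device. It estimates the transfer size above which paying the DMA setup cost becomes worthwhile.

```python
def transfer_time_us(n_bytes, setup_us, bytes_per_us):
    """Time to move n_bytes: fixed setup cost plus size divided by bandwidth."""
    return setup_us + n_bytes / bytes_per_us


def break_even_bytes(cpu_bytes_per_us, dma_bytes_per_us, dma_setup_us):
    """Transfer size at which a DMA transfer takes as long as a CPU-driven copy.

    Solves  dma_setup_us + n / dma_bw == n / cpu_bw  for n.
    """
    if dma_bytes_per_us <= cpu_bytes_per_us:
        raise ValueError("DMA must be faster than the CPU copy to break even")
    return dma_setup_us / (1 / cpu_bytes_per_us - 1 / dma_bytes_per_us)


# Assumed figures: CPU copy at 100 bytes/us with no setup cost,
# DMA at 400 bytes/us with a 3 us descriptor setup.
threshold = break_even_bytes(100, 400, 3)
for size in (64, 4000):
    cpu = transfer_time_us(size, 0, 100)
    dma = transfer_time_us(size, 3, 400)
    choice = "DMA" if size > threshold else "CPU copy"
    print(f"{size} bytes: cpu={cpu:.2f} us, dma={dma:.2f} us, prefer {choice}")
```

The model ignores the fact that DMA also frees the CPU for other work, which usually makes DMA attractive even below this threshold.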

Electronic Design Automation (EDA) Tools

Several EDA tools support hardware-software co-design, offering a range of functionalities to assist in the development process. These tools typically provide integrated environments for hardware description language (HDL) coding, software development, simulation, and verification. Examples include:

- Siemens EDA Questa (formerly Mentor Graphics QuestaSim): Offers co-simulation capabilities, allowing for the simultaneous simulation of hardware and software components to verify their interaction.

- Synopsys VCS: Provides a high-performance simulator used for verifying the hardware design, often integrated with software verification tools.

- Cadence Incisive (since succeeded by Cadence Xcelium): Another powerful simulator supporting hardware-software co-verification and debugging.

These tools often incorporate features for automatic code generation, hardware-software interface synthesis, and performance analysis, significantly accelerating the development process.

Hypothetical Hardware-Software Co-design Workflow

A typical hardware-software co-design workflow might follow these stages:

- Requirements Specification: Define the system’s functionality, performance targets, and constraints.

- Architectural Design: Develop a high-level architecture outlining the hardware and software components and their interactions.

- Hardware-Software Partitioning: Decide which functionalities are implemented in hardware and software, considering performance, cost, and power consumption.

- Hardware Design and Implementation: Design and implement the hardware components using HDLs (e.g., VHDL, Verilog) and synthesize them for target hardware (e.g., FPGA, ASIC).

- Software Development: Develop the software components, considering the hardware interface and communication protocols.

- Co-simulation and Verification: Simulate and verify the interaction between hardware and software using EDA tools.

- Integration and Testing: Integrate the hardware and software components and perform thorough system testing.

- Deployment and Optimization: Deploy the system and perform further optimization based on real-world performance data.

This workflow emphasizes iterative refinement, allowing for adjustments and improvements throughout the development process. The choice of specific tools and techniques will depend on the project’s requirements and constraints.
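The co-simulation and verification stage of the workflow above can be sketched in miniature: a floating-point software reference is compared against a bit-accurate model of what the hardware would compute. The multiply-accumulate unit, its 8 fractional bits, and the tolerances are all assumptions chosen for illustration; in practice the hardware side would be an RTL simulation driven by an EDA tool rather than a Python function.

```python
def reference_mac(xs, ws):
    """Software golden model: floating-point multiply-accumulate."""
    return sum(x * w for x, w in zip(xs, ws))


def to_fixed(value, frac_bits):
    """Quantize a real number to a fixed-point integer with frac_bits fractional bits."""
    return round(value * (1 << frac_bits))


def hardware_mac(xs, ws, frac_bits=8):
    """Bit-accurate model of a hypothetical fixed-point MAC unit."""
    acc = 0
    for x, w in zip(xs, ws):
        acc += to_fixed(x, frac_bits) * to_fixed(w, frac_bits)
    # Each product carries 2 * frac_bits fractional bits.
    return acc / (1 << (2 * frac_bits))


def co_verify(test_vectors, tolerance):
    """Return the vectors on which the two models disagree by more than tolerance."""
    failures = []
    for xs, ws in test_vectors:
        error = abs(reference_mac(xs, ws) - hardware_mac(xs, ws))
        if error > tolerance:
            failures.append((xs, ws, error))
    return failures


vectors = [
    ([0.5, 0.25], [1.0, -2.0]),  # exactly representable in fixed point
    ([0.1, 0.2], [0.3, 0.4]),    # incurs quantization error
]
print(len(co_verify(vectors, tolerance=1e-3)))  # 0
print(len(co_verify(vectors, tolerance=1e-5)))  # 1
```

Tightening the tolerance exposes the quantization error of the second vector, the kind of finding that feeds back into the partitioning and hardware design stages.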

Specific Examples of AI’s Influence on Chip Architecture

The rise of artificial intelligence has fundamentally reshaped the landscape of chip design, pushing for a closer integration between hardware and software than ever before. This isn’t just about faster processors; it’s about creating architectures specifically tailored to the unique computational demands of AI algorithms. This tighter coupling allows for significant performance improvements and energy efficiency gains, previously unimaginable with traditional general-purpose processors.

AI accelerators, like Google’s Tensor Processing Units (TPUs), are prime examples of this paradigm shift. These specialized chips are designed from the ground up to efficiently handle the matrix multiplications and other operations at the heart of machine learning algorithms. Their architecture is optimized for specific AI workloads, resulting in dramatically faster processing speeds compared to general-purpose CPUs or GPUs for these tasks. This optimization extends beyond the hardware itself; the software running on these chips is also deeply intertwined with the hardware’s design, maximizing performance and minimizing latency.

AI Accelerators and the Hardware-Software Symbiosis

TPUs, for instance, showcase the powerful synergy between hardware and software. Their architecture, featuring specialized matrix multiplication units and high-bandwidth memory systems, is intrinsically linked to the software frameworks (like TensorFlow) designed to run on them. The software is optimized to exploit the TPU’s unique capabilities, taking full advantage of its parallel processing power and minimizing data movement between memory and processing units.

This close collaboration between hardware and software engineers is crucial for achieving the impressive performance gains seen in AI applications running on TPUs. The design isn’t simply a hardware improvement; it’s a co-designed system where the hardware is built with the software in mind, and the software is written to exploit the hardware’s strengths.
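To see why minimizing data movement matters so much for matrix multiplication, consider the toy model below. It multiplies two square matrices tile by tile and counts how many elements are fetched from "off-chip" memory into a small on-chip buffer. This is a plain-Python sketch of the general tiling idea, not a description of how a TPU or TensorFlow actually schedules work; the function name and the load-counting model are assumptions.

```python
def tiled_matmul(a, b, tile):
    """Multiply square matrices tile by tile; return (product, off-chip element loads)."""
    n = len(a)
    if n % tile != 0:
        raise ValueError("matrix size must be a multiple of the tile size")
    c = [[0] * n for _ in range(n)]
    loads = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, n, tile):
                # Fetch one tile of A and one tile of B into the on-chip buffer.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                loads += 2 * tile * tile
                # Every fetched element is reused `tile` times from the buffer.
                for i in range(tile):
                    for j in range(tile):
                        acc = 0
                        for k in range(tile):
                            acc += a_tile[i][k] * b_tile[k][j]
                        c[i0 + i][j0 + j] += acc
    return c, loads


n = 4
a = [[i * n + j for j in range(n)] for i in range(n)]
identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
for tile in (1, 2, 4):
    product, loads = tiled_matmul(a, identity, tile)
    print(tile, loads, product == a)
```

In this model the number of loads is 2n³ divided by the tile size, so a larger on-chip buffer directly cuts memory traffic. Hardware with a big matrix unit and software that tiles its workloads to match are two halves of the same design decision.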

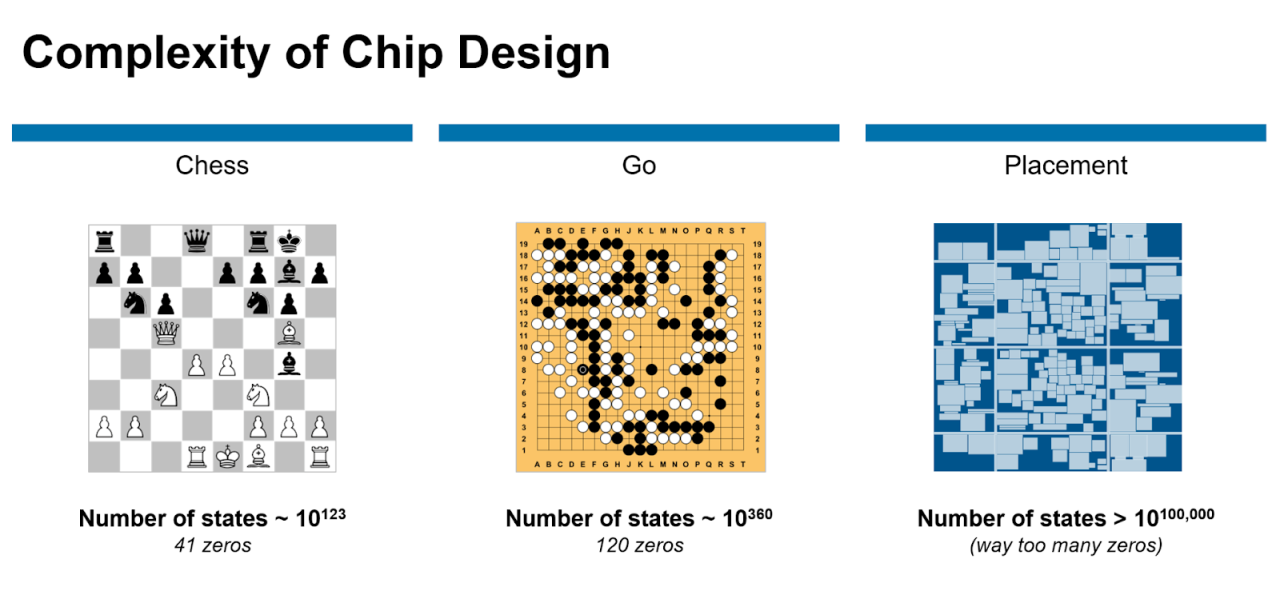

Machine Learning in Chip Design and Manufacturing

Machine learning is not only accelerating AI applications but also revolutionizing the design and manufacturing of the chips themselves. Algorithms are now used to optimize various aspects of the chip design process, from initial layout and placement of transistors to predicting yield and identifying potential manufacturing defects. For example, ML models can analyze vast datasets of design parameters and simulation results to predict the performance and power consumption of different design choices, enabling engineers to make more informed decisions and significantly reduce design time.

Similarly, ML algorithms are used in predictive maintenance of manufacturing equipment, leading to improved yield and reduced downtime. This application of AI in the chip manufacturing process itself is a clear demonstration of the cyclical and reinforcing nature of the AI revolution.
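The prediction idea above can be illustrated with the simplest possible model: a least-squares line fitted to past results and used to estimate an untried design point. The data here is synthetic, generated from an arbitrary linear formula purely so the example is self-checking; it does not represent any real chip, and production flows use far richer models and many more design parameters.

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


# Synthetic "simulation results": clock frequency in GHz versus power in watts,
# generated from the made-up relation power = 2.0 * freq + 1.0.
freqs_ghz = [1.0, 1.5, 2.0, 2.5, 3.0]
power_w = [2.0 * f + 1.0 for f in freqs_ghz]

slope, intercept = fit_line(freqs_ghz, power_w)
predicted = slope * 3.5 + intercept  # estimate for a design point not yet simulated
print(f"predicted power at 3.5 GHz: {predicted:.2f} W")
```

Replacing a full simulation run with a cheap prediction like this is what lets engineers screen many candidate designs before committing to detailed analysis.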

Architectural Comparison: General-Purpose vs. AI-Specific Processors

The differences between general-purpose processors and AI-specific processors are stark, reflecting the distinct software optimization strategies required for each.

| Processor Type | Architectural Features | Software Optimization Strategies | Target Applications |
|---|---|---|---|
| General-Purpose Processor (e.g., x86 CPU) | General-purpose instruction set, flexible architecture, cache hierarchy | Compiler optimizations, parallelization techniques, memory management | Wide range of applications, including operating systems, office productivity, gaming |
| AI-Specific Processor (e.g., TPU) | Specialized matrix multiplication units, high-bandwidth memory, custom instruction sets optimized for AI operations | Framework-specific optimizations, dataflow programming, low-level hardware control | Machine learning tasks such as image recognition, natural language processing, and deep learning |

Challenges and Future Directions

The increasingly tight coupling between hardware and software in AI-accelerated chip architectures presents both exciting opportunities and significant hurdles. While this co-design approach promises unprecedented performance gains, it also introduces complexities in design, verification, and deployment that demand innovative solutions. The future of chip architecture hinges on our ability to address these challenges effectively, leveraging advancements in AI and software to create truly transformative technologies.

The development of these tightly integrated systems is far more complex than designing hardware and software in isolation. The intricate interplay between hardware and software requires specialized tools and methodologies for co-verification and optimization. Traditional verification techniques often prove inadequate, leading to longer development cycles and increased costs. Furthermore, the specialized nature of these architectures can limit portability and hinder the adoption of standardized software development practices. Debugging becomes a significantly more challenging task, requiring sophisticated debugging tools that can bridge the hardware-software divide. The need for highly specialized expertise further exacerbates these difficulties.

Increased Design Complexity and Verification Challenges

The inherent complexity of hardware-software co-design stems from the numerous interactions and dependencies between the hardware and software components. For example, consider the design of a specialized AI accelerator. The architecture needs to be optimized for specific neural network operations, requiring close collaboration between hardware and software engineers. Changes in the software algorithms might necessitate adjustments to the hardware architecture, and vice-versa, creating a complex iterative design process.

Verification becomes considerably harder, requiring extensive simulation and testing to ensure the correctness and reliability of the entire system. Formal verification methods, while promising, are often computationally expensive and may not scale well for highly complex systems. The need for comprehensive testing and validation adds significant time and cost to the development process. A real-world example is the development of custom ASICs for demanding applications such as self-driving cars or large-scale scientific simulations, where the tight integration of hardware and software necessitates rigorous verification to guarantee reliability and performance.

Future Trends in Chip Architecture Driven by AI and Software

The future of chip architecture will be shaped by several key trends. First, we can expect to see a continued increase in the specialization of hardware architectures. AI accelerators will become increasingly sophisticated, optimized for specific neural network types and operations. This specialization will lead to higher performance and energy efficiency but also increase the complexity of the hardware-software interface.

Second, software will play an increasingly crucial role in managing and optimizing these specialized hardware architectures. Advanced compilers and runtime systems will be needed to efficiently map AI workloads onto the hardware, maximizing performance and minimizing energy consumption. Third, new programming models and tools will be essential to simplify the development process. These tools will need to abstract away many of the hardware-specific details, allowing developers to focus on the AI algorithms rather than low-level hardware interactions.

The rise of neuromorphic computing, which mimics the structure and function of the human brain, represents a significant paradigm shift, requiring novel software and hardware co-design approaches. Companies like Intel and IBM are actively investing in this area, demonstrating the potential of this technology for future AI applications.

Anticipated Evolution of Hardware-Software Co-design

Imagine a graph depicting the evolution of hardware-software co-design over the next decade. The X-axis represents time, starting from the present and extending to 2033. The Y-axis represents the level of hardware-software integration, ranging from loosely coupled (at the bottom) to tightly coupled (at the top). The graph would show a steady upward trend, indicating increasing integration over time.

Initially, the line would rise gradually, reflecting the current state of co-design. However, around the mid-point (2026-2028), the line would show a steeper incline, reflecting the acceleration of advancements in AI and the increasing demand for highly optimized AI systems. The curve would eventually plateau towards the top, suggesting that while integration will continue, there will be limitations to how tightly hardware and software can be coupled.

This plateau represents a point of equilibrium, where the benefits of further integration are outweighed by the associated costs and complexities. Specific points along the graph could represent milestones like the widespread adoption of new programming paradigms or the emergence of revolutionary hardware architectures like quantum computing.
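The curve described above (a gradual rise, a steeper middle section, then a plateau) has the shape of a logistic function. The sketch below is only a way to visualize that shape: the midpoint of 2027 is taken from the 2026-2028 window mentioned in the text, while the steepness value and the 0-to-1 scale are arbitrary assumptions. It is not a forecast.

```python
import math


def integration_level(year, midpoint=2027.0, steepness=1.0):
    """Logistic curve: 0 means loosely coupled, 1 means tightly coupled."""
    return 1.0 / (1.0 + math.exp(-steepness * (year - midpoint)))


for year in range(2023, 2034, 2):
    level = integration_level(year)
    bar = "#" * round(level * 40)  # crude text plot of the curve
    print(f"{year}  {level:.2f}  {bar}")
```

Lowering `steepness` stretches the transition over more years, and moving `midpoint` shifts when the acceleration happens, so the same function can express more or less optimistic versions of the scenario.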

The convergence of AI and chip architecture is rewriting the rules of computing. The tighter bond between software and hardware, once a distant dream, is now a powerful reality, thanks to AI-driven optimization and innovative co-design techniques. While challenges remain – increased complexity and verification being key among them – the future looks incredibly bright. We can expect even more sophisticated and energy-efficient chips, tailored to specific AI workloads and beyond.

This isn’t just about faster processing; it’s about unlocking entirely new possibilities in computation, driving innovation across industries and fundamentally changing how we interact with technology.