Why Regulators Are Focusing on AI’s Immediate Risks

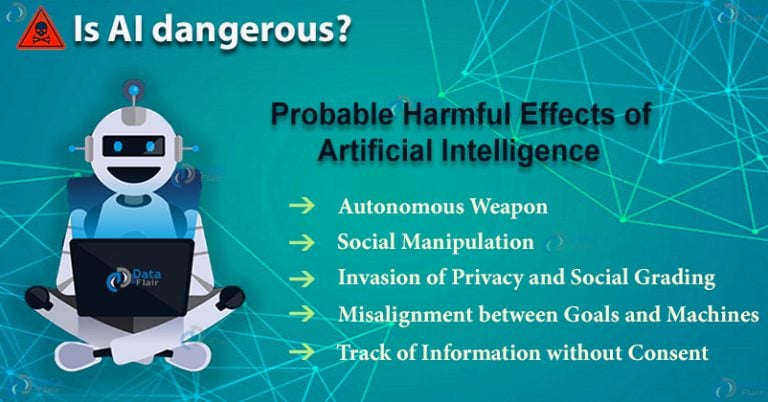

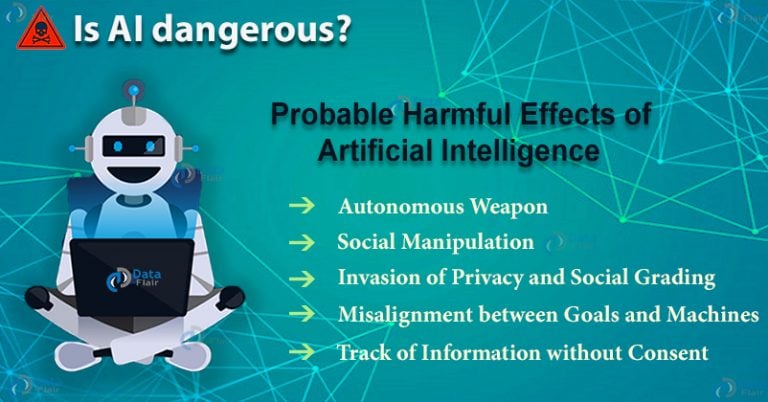

Why are regulators focusing on AI’s immediate risks? It’s a question buzzing louder every day. The rapid advancement of artificial intelligence has brought incredible potential, but also a whirlwind of ethical dilemmas and practical dangers. From biased algorithms perpetuating societal inequalities to the very real threat of autonomous systems malfunctioning, the risks are immediate and demand urgent attention.

This isn’t about slowing innovation; it’s about ensuring AI benefits humanity safely and fairly.

The current regulatory focus stems from a growing understanding that unchecked AI development could lead to significant harm. We’re seeing examples across various sectors, from discriminatory lending decisions driven by biased algorithms to the potential for deepfakes to destabilize democracies. Regulators are scrambling to keep pace, trying to create frameworks that both foster innovation and protect us from the potential downsides.

This is a complex balancing act, but one absolutely crucial for the future.

Data Privacy and Security Concerns

The rapid advancement of AI systems brings with it significant concerns regarding data privacy and security. The vast amounts of data required to train and operate these systems create vulnerabilities that necessitate a proactive and robust regulatory approach. This isn’t just about theoretical risks; real-world breaches demonstrate the tangible consequences of inadequate data protection in the AI landscape.

AI systems often collect, store, and process personal data on an unprecedented scale. This data is used for various purposes, from training algorithms to personalizing user experiences. However, this extensive data handling raises significant concerns about compliance with existing data privacy regulations like GDPR and CCPA. The potential for misuse, unauthorized access, and unintended disclosure of sensitive information is substantial.

Data Breaches Related to AI Systems and Their Consequences

Data breaches involving AI systems show how serious these vulnerabilities can be. A breach of a facial recognition system, for example, could enable the unauthorized identification and tracking of individuals, violating their privacy and exposing them to identity theft or other serious harms. Similarly, a breach of a healthcare AI system could expose sensitive patient data, leading to significant reputational damage and legal repercussions for the organization involved.

The consequences can range from financial penalties and legal action to irreparable damage to public trust. These breaches underscore the need for robust security measures and rigorous oversight.

Technical and Legal Challenges in Ensuring Data Security in AI Applications

Ensuring data security in AI applications presents both technical and legal challenges. Technically, securing the vast datasets used to train AI models requires strong encryption, robust access controls, and continuous monitoring for vulnerabilities. The distributed nature of some AI systems, particularly those that rely on blockchain or other distributed ledger technologies, adds another layer of complexity. Legally, ambiguity over data ownership and liability in AI systems makes it hard to assign responsibility when a breach occurs.

Determining who is accountable—the AI developer, the data provider, or the system user—can be complex and often requires navigating a patchwork of existing regulations. The evolving nature of AI technology also means that legal frameworks often struggle to keep pace with the latest developments.
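To make the access-control and accountability points above more concrete, here is a minimal Python sketch of a deny-by-default permission check that writes every decision to an audit log. The role names, dataset names, and the `PERMISSIONS` table are hypothetical; a production system would typically rely on its platform's identity and access management service rather than an in-process dictionary.

```python
import logging
from typing import Dict, Set

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("data_access_audit")

# Hypothetical role-to-dataset permissions. Anything not listed is denied.
PERMISSIONS: Dict[str, Set[str]] = {
    "ml_engineer": {"training_features"},
    "privacy_officer": {"training_features", "raw_personal_data"},
}


def can_access(user: str, role: str, dataset: str) -> bool:
    """Deny-by-default access check that records every decision for later audits."""
    # Unknown roles get an empty permission set, so they are always denied.
    allowed = dataset in PERMISSIONS.get(role, set())
    audit_log.info(
        "user=%s role=%s dataset=%s decision=%s",
        user, role, dataset, "ALLOW" if allowed else "DENY",
    )
    return allowed


if __name__ == "__main__":
    can_access("dana", "ml_engineer", "training_features")
    can_access("dana", "ml_engineer", "raw_personal_data")
```

Recording denials as well as approvals matters: when a breach is investigated, the audit trail is what helps establish who accessed what, which feeds directly into the question of who is accountable.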

Best Practices for Protecting Sensitive Data Used in AI Development and Deployment

Protecting sensitive data in AI requires a multi-faceted approach. A checklist of best practices should include:

- Data Minimization: Collect only the data necessary for the specific AI application.

- Data Anonymization and Pseudonymization: Employ techniques to remove or mask personally identifiable information whenever possible.

- Strong Encryption: Utilize robust encryption methods both in transit and at rest to protect data from unauthorized access.

- Access Control: Implement strict access controls to limit access to sensitive data to authorized personnel only.

- Regular Security Audits: Conduct regular security audits and penetration testing to identify and address vulnerabilities.

- Incident Response Plan: Develop and regularly test an incident response plan to effectively manage data breaches.

- Compliance with Data Privacy Regulations: Ensure compliance with all relevant data privacy regulations, such as GDPR and CCPA.

- Transparency and Accountability: Maintain transparency regarding data collection, usage, and security practices.

Implementing these best practices is crucial for mitigating the risks associated with AI and building trust in this rapidly evolving technology. Failure to do so can lead to severe consequences, including hefty fines, reputational damage, and erosion of public trust.
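Two of the items above, data minimization and pseudonymization, can be illustrated in a few lines. The sketch below assumes a hypothetical allow-list of fields the model needs and uses a keyed hash (HMAC-SHA256 from Python's standard library) to replace direct identifiers. The field names and key handling are illustrative only.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this illustrative model actually needs.
REQUIRED_FIELDS = {"user_id", "age", "income"}

# Direct identifiers that must not leave this step in clear text.
IDENTIFIER_FIELDS = {"user_id"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same input always maps to the same token, so records can still be
    joined, but the original value cannot be recovered without the key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, key: bytes) -> dict:
    """Apply data minimization, then pseudonymize direct identifiers."""
    # Data minimization: drop every field that is not on the allow-list.
    minimized = {k: v for k, v in record.items() if k in REQUIRED_FIELDS}
    # Pseudonymization: swap identifiers for keyed tokens.
    for field in IDENTIFIER_FIELDS:
        if field in minimized:
            minimized[field] = pseudonymize(str(minimized[field]), key)
    return minimized


if __name__ == "__main__":
    secret_key = b"load-me-from-a-secrets-manager"  # placeholder, never hard-code in practice
    raw = {"user_id": "alice@example.com", "name": "Alice", "age": 34,
           "income": 52000, "home_address": "1 Main St"}
    print(prepare_record(raw, secret_key))
```

A keyed hash is used instead of a plain hash because common identifiers such as email addresses can be guessed and re-hashed by an attacker; without the key, that dictionary attack does not work. Note that under GDPR, pseudonymized data still counts as personal data, so this reduces risk rather than removing compliance obligations.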

Job Displacement and Economic Inequality

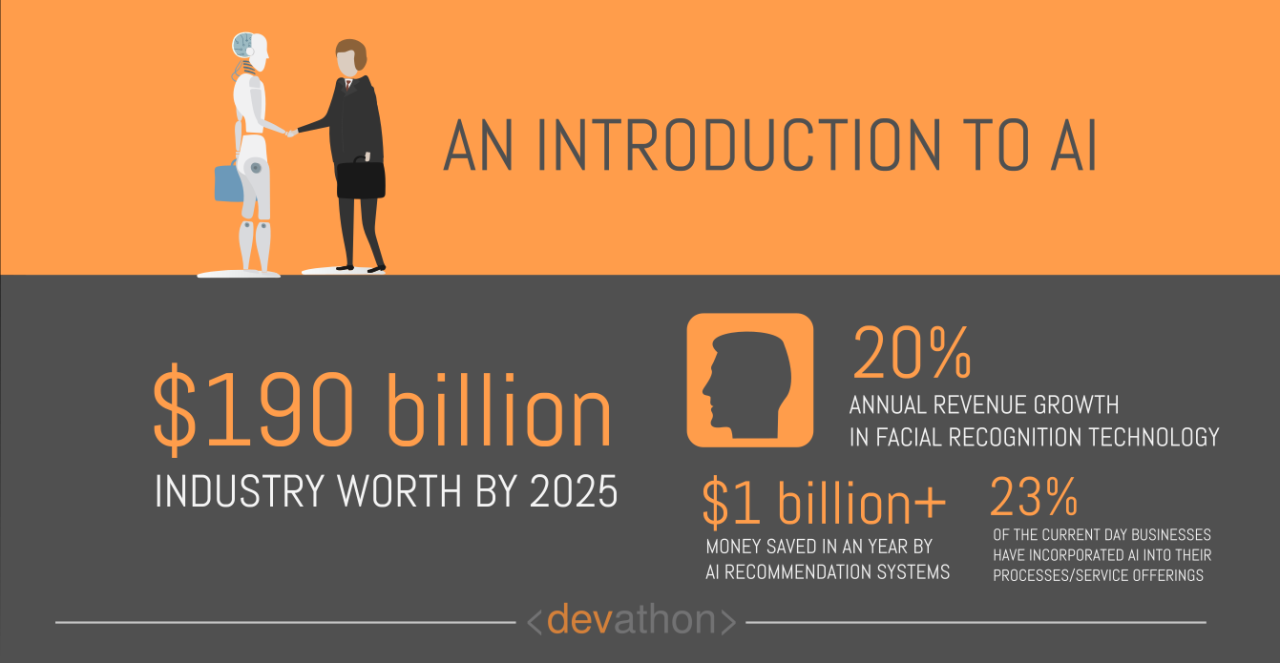

The rapid advancement of artificial intelligence (AI) presents a double-edged sword: while offering immense potential for economic growth and societal improvement, it also poses significant challenges, particularly concerning job displacement and the exacerbation of economic inequality. Understanding the sectors most at risk, analyzing the potential economic impact, and developing effective mitigation strategies are crucial for navigating this transformative period responsibly.

AI’s transformative power is reshaping the economic landscape at an unprecedented pace. While some predict a net increase in jobs due to AI-driven innovation, the transition will undoubtedly be disruptive, leaving many workers vulnerable to displacement and requiring significant adaptation. The economic benefits of AI are substantial, potentially boosting productivity, creating new industries, and improving the efficiency of existing ones. However, the costs associated with job losses, retraining initiatives, and the widening income gap must be carefully considered.

Sectors Most Vulnerable to AI Automation

Several sectors are particularly susceptible to automation due to the nature of their tasks. These include manufacturing, transportation (particularly trucking and delivery services), customer service (through the use of chatbots and automated phone systems), data entry, and basic accounting functions. The repetitive and rule-based nature of many jobs within these sectors makes them ideal candidates for AI-powered automation.

For example, the rise of self-driving vehicles poses a significant threat to professional drivers, while automated systems are already handling many customer service inquiries with increasing efficiency. The impact on employment in these sectors will vary depending on the pace of technological adoption and the availability of retraining and upskilling opportunities.

Comparative Analysis of Economic Benefits and Costs

The potential economic benefits of widespread AI adoption are substantial. Increased productivity across various industries, the creation of entirely new markets and industries, and the improvement of existing services all contribute to significant economic growth. However, the costs associated with job displacement are equally significant. Retraining programs, social safety nets, and potential increases in social unrest are all potential economic burdens.

The net economic impact will depend on several factors, including the speed of AI adoption, the effectiveness of government policies to mitigate negative consequences, and the ability of the workforce to adapt to the changing job market. A recent study by the McKinsey Global Institute, for example, suggests that while AI could displace a significant number of jobs, it could also create new ones, resulting in a net positive economic impact in the long run, but only if proactive measures are taken.

Potential Impact of AI on Various Job Roles

| Job Role | Automation Risk (High/Medium/Low) | Potential Job Displacement | Mitigation Strategies |
|---|---|---|---|
| Truck Driver | High | Significant | Retraining for logistics management, autonomous vehicle maintenance |
| Data Entry Clerk | High | Significant | Retraining for data analysis, software development |
| Customer Service Representative | Medium | Moderate | Upskilling in complex problem-solving, relationship management |
| Software Engineer | Low | Minimal | Focus on advanced AI development and implementation |
| Physician | Low | Minimal | AI-assisted diagnosis and treatment |
| Teacher | Low | Minimal | AI-powered personalized learning tools |

Mitigating the Negative Economic Consequences of AI-Driven Job Displacement

Mitigating the negative economic consequences of AI-driven job displacement requires a multi-pronged approach. This includes investing heavily in education and retraining programs to equip workers with the skills needed for the jobs of the future. Strengthening social safety nets such as unemployment insurance, and considering proposals such as universal basic income, can provide a crucial buffer for those displaced by automation. Fostering a culture of lifelong learning and encouraging adaptability within the workforce are also essential.

Government policies should also focus on promoting the responsible development and deployment of AI, ensuring that its benefits are widely shared and its negative consequences are minimized. Finally, focusing on job creation in sectors less susceptible to automation and investing in infrastructure to support new industries will help to create a more resilient and inclusive economy.

Lack of Transparency and Explainability

The increasing reliance on AI systems across various sectors raises significant concerns about their lack of transparency and explainability. Understanding how these complex algorithms arrive at their decisions is crucial for building trust, ensuring accountability, and mitigating potential biases and risks. Without this understanding, we risk deploying systems that make unfair or discriminatory decisions, impacting individuals and society at large.

The challenge lies in the inherent complexity of many AI models, particularly deep learning systems. These models often consist of millions or even billions of parameters, making it difficult, if not impossible, to trace the exact path from input data to final output. This “black box” nature prevents us from understanding the reasoning behind a decision, hindering our ability to identify and correct errors or biases. For example, a loan rejection produced by a complex AI model may be impossible to explain without knowing the specific factors that contributed to the negative decision.

This lack of insight makes it difficult to address any potential discrimination or unfairness embedded within the system.

Challenges in Understanding AI Decision-Making

The opacity of complex AI models presents numerous challenges. One key difficulty stems from the sheer number of parameters and layers involved in deep learning architectures. Tracing the influence of individual data points on the final prediction is computationally expensive and often practically infeasible. Furthermore, the non-linear relationships between input and output make it challenging to interpret the model’s internal representations.

Another significant hurdle is the lack of standardized methods for explaining AI decisions. Different models employ different architectures and techniques, making it difficult to develop a universal approach to explainability.

The Importance of Transparency and Explainability in Building Trust

Transparency and explainability are essential for building public trust in AI systems. When individuals understand how an AI system arrives at a decision, they are more likely to accept and trust its outcomes. This is particularly crucial in high-stakes domains such as healthcare, finance, and criminal justice, where AI-driven decisions can have significant consequences. Lack of transparency, on the other hand, can lead to distrust, skepticism, and even resistance to the adoption of AI technologies.

For instance, if a medical diagnosis is made by an opaque AI system, patients might be hesitant to accept the diagnosis without a clear explanation of the reasoning behind it. Openness and explainability are therefore crucial for fostering acceptance and responsible AI implementation.

Techniques for Enhancing Transparency in AI Decision-Making

Several techniques aim to make AI decision-making processes more transparent. These include:

- Feature importance analysis: This involves identifying the input features that have the greatest influence on the model’s predictions. Techniques like SHAP (SHapley Additive exPlanations) provide quantitative measures of feature importance, offering insights into the factors driving the model’s decisions.

- Local Interpretable Model-agnostic Explanations (LIME): LIME approximates the complex model locally around a specific prediction, using a simpler, more interpretable model to explain the prediction. This provides a local explanation for individual instances.

- Rule extraction: This involves deriving a set of human-readable rules that capture the model’s decision-making logic. It works best when the model’s behavior can be described by a manageable number of rules; for highly complex models, the extracted rules may only approximate what the model actually does.

- Visualization techniques: Visualizing a model’s internal representations, such as activation maps, or the structure of an interpretable model such as a decision tree, can help to understand its behavior and identify potential biases.

These methods offer different levels of explanation, with some providing more global insights into the model’s overall behavior and others focusing on local explanations for individual predictions. The choice of technique depends on the specific model and application.
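To show what a feature-importance score like SHAP is actually computing, the sketch below calculates exact Shapley values for a single prediction by enumerating every coalition of features. It makes a simplifying assumption: a feature that is "absent" takes its value from one reference input, whereas the SHAP library averages over a background dataset and uses faster approximations. The toy scoring function and feature names are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values for one prediction, relative to a single reference input.

    Features outside a coalition take their baseline value. The cost grows as 2**n,
    so this brute-force version only suits a handful of features.
    """
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Use x for features in the coalition and the baseline everywhere else.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi: List[float] = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalitions of the other features: size 0..n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


if __name__ == "__main__":
    # Hypothetical credit-scoring function with one interaction term.
    def toy_score(z: Sequence[float]) -> float:
        income, years_of_history, missed_payments = z
        return (0.4 * income + 1.5 * years_of_history
                - 2.0 * missed_payments + 0.1 * income * years_of_history)

    applicant = [5.0, 2.0, 3.0]
    reference = [4.0, 6.0, 0.0]
    contributions = shapley_values(toy_score, applicant, reference)
    for name, c in zip(["income", "years_of_history", "missed_payments"], contributions):
        print(f"{name:>18}: {c:+.2f}")
    # Efficiency property: contributions sum to toy_score(applicant) - toy_score(reference).
    print(f"{'sum':>18}: {sum(contributions):+.2f}")
```

Because the enumeration grows as 2^n, this brute-force version is only practical for a few features, which is why approximation methods exist. Its value is that it exposes the key property behind these explanations: the per-feature contributions add up exactly to the gap between the prediction and the reference.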

A Guide for Developers: Designing AI Systems with Enhanced Explainability

Developers can proactively design AI systems with enhanced explainability features by incorporating the following steps:

- Choose appropriate models: Select models known for their inherent interpretability, such as linear regression or decision trees, whenever feasible. Avoid overly complex models unless absolutely necessary.

- Collect and preprocess data carefully: Ensure data quality and address potential biases in the data. This is crucial for creating fair and reliable AI systems.

- Integrate explainability techniques: Incorporate explainability methods during the model development process, rather than as an afterthought. Use techniques such as SHAP or LIME to gain insights into the model’s decision-making.

- Document the model and its limitations: Provide clear documentation that describes the model’s architecture, training data, and limitations. This transparency helps users understand the context and potential biases of the system.

- Develop user-friendly interfaces: Design interfaces that present explanations in a clear and understandable manner, catering to users with varying levels of technical expertise.

By prioritizing explainability from the outset, developers can build AI systems that are not only accurate and efficient but also trustworthy and accountable. This approach is essential for fostering wider acceptance and responsible use of AI technologies.
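As an illustration of the first and last steps (choosing an inherently interpretable model and presenting explanations in plain language), here is a sketch of a linear scoring model that returns "reason codes" alongside its decision, in the spirit of the loan example earlier. The weights, baseline applicant, threshold, and feature names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LinearScorer:
    """A deliberately simple, interpretable scoring model (all values hypothetical)."""
    weights: Dict[str, float]
    baseline: Dict[str, float]  # reference applicant used to express contributions
    intercept: float
    threshold: float

    def score(self, applicant: Dict[str, float]) -> float:
        return self.intercept + sum(w * applicant[f] for f, w in self.weights.items())

    def contributions(self, applicant: Dict[str, float]) -> Dict[str, float]:
        # For a linear model, each feature's effect relative to the baseline is exact:
        # weight * (value - baseline value).
        return {f: w * (applicant[f] - self.baseline[f]) for f, w in self.weights.items()}

    def decide(self, applicant: Dict[str, float], top_k: int = 2) -> Tuple[bool, List[str]]:
        approved = self.score(applicant) >= self.threshold
        # Reason codes: the features that pushed the score down the most, worst first.
        negatives = sorted(
            (c, f) for f, c in self.contributions(applicant).items() if c < 0
        )
        reasons = [f"{f} lowered the score by {-c:.1f} points" for c, f in negatives[:top_k]]
        return approved, reasons


if __name__ == "__main__":
    model = LinearScorer(
        weights={"income_k": 0.5, "years_of_history": 2.0, "missed_payments": -8.0},
        baseline={"income_k": 50, "years_of_history": 5, "missed_payments": 0},
        intercept=0.0,
        threshold=30.0,
    )
    approved, reasons = model.decide({"income_k": 40, "years_of_history": 2, "missed_payments": 1})
    print("approved:", approved)
    for r in reasons:
        print(" -", r)
```

With a linear model, each feature's contribution relative to the baseline is exact rather than estimated, so the reasons shown to an applicant faithfully describe how the model reached its decision. The trade-off is that such a model may be less accurate than a more complex one, which is the judgment the guide asks developers to make.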

Unintended Consequences and Risks of Autonomous Systems

The rapid advancement and deployment of autonomous systems across various sectors present significant opportunities, but also introduce a range of unforeseen risks and ethical dilemmas. While the benefits are clear in areas like increased efficiency and reduced human error, the potential for unintended consequences in critical infrastructure and the inherent complexities of these systems demand careful consideration and proactive mitigation strategies.

This section explores some of the key concerns surrounding the deployment of autonomous systems.

Autonomous systems, by their very nature, operate with a degree of independence from human control. This independence, while offering advantages, also creates vulnerabilities. The potential for malfunctions, unforeseen interactions with the environment, and malicious exploitation presents significant challenges. The lack of readily available human intervention can amplify the impact of even minor errors, leading to potentially catastrophic outcomes.

Autonomous Systems in Critical Infrastructure

The deployment of autonomous systems in critical infrastructure like transportation and healthcare carries immense potential benefits, such as improved efficiency and reduced human error in tasks requiring precision and continuous operation. However, the consequences of failures in these systems can be devastating. Imagine a self-driving vehicle malfunctioning on a busy highway, or a surgical robot experiencing a software glitch during a complex operation.

The scale of potential harm in such scenarios underscores the need for rigorous testing, robust safety protocols, and comprehensive oversight mechanisms. A failure in a power grid managed by an autonomous system, for example, could cause widespread blackouts with significant economic and social consequences.

Examples of Autonomous System Malfunctions and Misuse

Several real-world incidents have highlighted the potential for malfunctions and misuse of autonomous systems. For instance, early iterations of self-driving cars have struggled to navigate unexpected situations such as heavy snow or construction zones. Similarly, concerns that autonomous weapons systems could misidentify targets and harm civilians underscore the risks associated with delegating lethal force to machines. The potential for malicious actors to hack or manipulate autonomous systems to cause harm is also a significant concern.

A compromised autonomous vehicle could be used to cause an accident, or a hacked medical device could inflict harm on a patient. These examples demonstrate the need for robust cybersecurity measures and careful consideration of potential vulnerabilities.
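One basic cybersecurity measure against a compromised or spoofed controller is to authenticate every command and reject stale ones. The Python sketch below signs commands with HMAC-SHA256 and checks a timestamp. The message format, the two-second freshness window, and the shared-key setup are assumptions for illustration, not how any particular vehicle or medical-device protocol works.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

MAX_AGE_SECONDS = 2.0  # hypothetical freshness window for control messages


def sign_command(command: dict, key: bytes, timestamp: Optional[float] = None) -> dict:
    """Attach a timestamp and an HMAC-SHA256 tag to a control command."""
    body = dict(command, ts=time.time() if timestamp is None else timestamp)
    # sort_keys gives a stable byte encoding so sender and receiver hash the same thing.
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_command(message: dict, key: bytes, now: Optional[float] = None) -> bool:
    """Accept a command only if its tag is valid and it is recent."""
    payload = json.dumps(message["body"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through comparison timing.
    if not hmac.compare_digest(expected, message["tag"]):
        return False
    now = time.time() if now is None else now
    age = now - message["body"]["ts"]
    return 0 <= age <= MAX_AGE_SECONDS


if __name__ == "__main__":
    key = b"shared-secret-provisioned-securely"  # placeholder
    msg = sign_command({"action": "set_speed", "value_kph": 30}, key)
    print(verify_command(msg, key))   # untouched message
    msg["body"]["value_kph"] = 130    # tampered in transit
    print(verify_command(msg, key))   # tag no longer matches, so it is rejected
```

A freshness window only narrows the opportunity to replay a captured command; a real system would also track nonces or sequence numbers and would need secure key provisioning, both of which this sketch leaves out.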

Ethical Considerations of Autonomous Weapons Systems

The development and deployment of autonomous weapons systems (AWS), also known as lethal autonomous weapons, raise profound ethical questions. The potential for these systems to make life-or-death decisions without human intervention challenges fundamental principles of accountability and human control. Concerns include the potential for unintended escalation of conflicts, the difficulty in establishing clear lines of responsibility for actions taken by AWS, and the risk of these systems falling into the wrong hands.

The absence of human judgment in the use of lethal force raises serious ethical and moral dilemmas that require international cooperation and robust regulatory frameworks. The potential for algorithmic bias in targeting decisions also needs careful consideration.

Safety Protocols and Risk Mitigation Strategies for Autonomous Systems

Mitigating the risks associated with autonomous systems requires a multi-faceted approach. This includes rigorous testing and validation procedures, the development of robust fail-safe mechanisms, and the implementation of effective cybersecurity protocols. Furthermore, clear lines of responsibility and accountability must be established, and appropriate regulatory frameworks need to be developed and enforced. Independent audits and oversight mechanisms are crucial to ensure the safety and reliability of these systems.

Continuous monitoring and data analysis can help identify potential vulnerabilities and inform the development of improved safety protocols. Furthermore, the development of ethical guidelines and standards for the design and deployment of autonomous systems is essential to ensure their responsible use. Transparency in algorithms and decision-making processes is also vital for building trust and fostering accountability.
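Fail-safe mechanisms and continuous monitoring often take the form of a runtime monitor that sits between the decision-making software and the actuators. The sketch below is a minimal, hypothetical version: it degrades behavior when perception confidence drops or sensor data goes stale, and latches into a safe stop after repeated faults until a human resets it. All thresholds are placeholders.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    NORMAL = "normal"
    DEGRADED = "degraded"    # e.g. slow down or request human takeover
    SAFE_STOP = "safe_stop"  # move to a minimal-risk state and stay there


@dataclass
class MonitorConfig:
    # Illustrative placeholder thresholds, not recommended values.
    min_confidence: float = 0.8
    max_sensor_age_s: float = 0.2
    max_consecutive_faults: int = 3


class SafetyMonitor:
    """Hypothetical runtime watchdog between the planner and the actuators."""

    def __init__(self, config: MonitorConfig) -> None:
        self.config = config
        self.consecutive_faults = 0
        self.latched = False

    def check(self, confidence: float, sensor_age_s: float) -> Mode:
        # Once a safe stop has been triggered, stay there until reset().
        if self.latched:
            return Mode.SAFE_STOP
        fault = (confidence < self.config.min_confidence
                 or sensor_age_s > self.config.max_sensor_age_s)
        # Count consecutive faults; one healthy reading resets the counter.
        self.consecutive_faults = self.consecutive_faults + 1 if fault else 0
        if self.consecutive_faults >= self.config.max_consecutive_faults:
            self.latched = True
            return Mode.SAFE_STOP
        return Mode.DEGRADED if fault else Mode.NORMAL

    def reset(self) -> None:
        """Explicit operator action required to leave the safe-stop state."""
        self.latched = False
        self.consecutive_faults = 0


if __name__ == "__main__":
    monitor = SafetyMonitor(MonitorConfig())
    readings = [(0.95, 0.05), (0.60, 0.05), (0.90, 0.50), (0.70, 0.10), (0.99, 0.01)]
    for confidence, age in readings:
        print(confidence, age, monitor.check(confidence, age).value)
```

Latching is a deliberate design choice: once the system has decided it cannot operate safely, it should not silently resume just because one reading looks healthy again.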

The Spread of Misinformation and Deepfakes

The rise of artificial intelligence has ushered in an era of unprecedented technological advancement, but it has also created new avenues for the spread of misinformation and deepfakes. AI’s ability to generate realistic yet fabricated content poses a significant threat to individuals, societies, and democratic processes. This section explores the role of AI in this disturbing trend, examining its societal impact and potential mitigation strategies.

AI’s power to generate and disseminate misinformation and deepfakes is deeply concerning. Sophisticated algorithms can now create convincing fake videos, audio recordings, and images, making it increasingly difficult to distinguish between truth and falsehood. This technology is easily accessible, and its misuse can have far-reaching consequences.

AI’s Role in Generating and Disseminating Misinformation and Deepfakes

AI-powered tools built on generative models such as generative adversarial networks (GANs) let people with minimal technical expertise create hyperrealistic deepfakes. These tools can be used to manipulate existing videos and images, inserting false information, or to create entirely fabricated content. The ease of creation and distribution through social media platforms amplifies the reach and impact of this misinformation, making its spread difficult to control.

Moreover, AI-driven bots and automated social media accounts can share this content at scale, rapidly increasing its visibility and influence. This automated dissemination can overwhelm fact-checking efforts and make it challenging to identify the source of the misinformation.

Societal Impact of AI-Generated Fake Content

The societal impact of AI-generated fake content is substantial and multifaceted. Deepfakes can be used to damage reputations, spread propaganda, incite violence, and undermine trust in institutions. For example, a deepfake video of a political leader making a controversial statement could significantly impact an election. Similarly, fake news articles generated by AI can manipulate public opinion and influence policy decisions.

The erosion of trust in media and institutions caused by the proliferation of AI-generated misinformation is a serious threat to social cohesion and democratic processes. Consider the 2016 US presidential election, when fabricated news stories spread rapidly on social media, raising concerns about their influence on voters. Those stories were not primarily AI-generated, but the potential for AI to magnify such effects is evident.

Methods for Detecting and Mitigating the Spread of AI-Generated Misinformation

Several methods are being developed to detect and mitigate the spread of AI-generated misinformation. These include advanced detection algorithms that analyze video and audio for inconsistencies, identifying subtle artifacts often present in deepfakes. Another approach involves developing AI models that can identify patterns and characteristics of fake content based on its distribution and source. Furthermore, media literacy education plays a crucial role in equipping individuals with the skills to critically evaluate information and identify potential deepfakes.

This involves teaching people to be skeptical of sensational content and to cross-reference information from multiple reliable sources. Strengthening platform policies and implementing robust content moderation strategies are also essential. This includes improved fact-checking mechanisms and the development of tools that can quickly flag potentially fake content for review.
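Detecting a brand-new deepfake generally requires trained classifiers, but one piece of a flagging pipeline is simpler: catching re-uploads of content that fact-checkers have already debunked, even after recompression or small edits. The sketch below uses an average hash, a basic perceptual-hashing technique, and assumes images have already been converted to 8x8 grayscale (for example with an imaging library). The distance threshold is an illustrative choice.

```python
from typing import Iterable, Sequence

Image = Sequence[Sequence[int]]  # grayscale pixels 0-255, already resized to 8x8


def average_hash(pixels: Image) -> int:
    """64-bit average hash: each bit records whether a pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def flag_for_review(upload: Image, known_fake_hashes: Iterable[int], max_distance: int = 5) -> bool:
    """Flag an upload whose hash is close to any previously debunked item."""
    h = average_hash(upload)
    return any(hamming(h, known) <= max_distance for known in known_fake_hashes)


if __name__ == "__main__":
    # A synthetic 8x8 gradient stands in for a known, already-debunked image.
    debunked = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
    known_hashes = [average_hash(debunked)]
    # A slightly brightened copy, as might survive re-encoding.
    reupload = [[min(255, v + 3) for v in row] for row in debunked]
    print(flag_for_review(reupload, known_hashes))
```

Average hashing is easy to evade with crops or heavier edits, so in practice it would complement, not replace, the detection models and human review described above.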

Public Awareness Campaign: Understanding the Risks of Deepfakes and Misinformation

A comprehensive public awareness campaign is vital to combat the spread of AI-generated misinformation. This campaign should focus on educating the public about the capabilities of AI in creating realistic fake content and the potential consequences of believing and sharing such content. The campaign could utilize various media channels, including social media, television, and educational institutions. Key messages should emphasize the importance of critical thinking, verifying information from multiple sources, and reporting suspicious content.

Visual aids, such as short videos demonstrating how deepfakes are created and how to spot them, could be highly effective. Furthermore, the campaign could partner with social media platforms and fact-checking organizations to promote responsible online behavior and to combat the spread of misinformation. A clear and concise slogan, such as “Think Before You Share: Spotting Deepfakes in the Digital Age,” could help create a strong and memorable message.

The campaign could also highlight the legal and ethical implications of creating and distributing deepfakes, emphasizing the potential for severe consequences.

The push for AI regulation isn’t about stifling progress; it’s about responsible innovation. The immediate risks are real, and ignoring them would be reckless. While the solutions are complex and constantly evolving, the conversation is essential. We need open dialogue between developers, regulators, and the public to navigate this technological frontier responsibly, ensuring AI serves humanity, not the other way around.

The future of AI depends on it.