The Breakthrough AI Needs

The breakthroughs AI needs aren’t just about faster computers; they’re about a fundamental shift in how we approach artificial intelligence. We’re on the cusp of something incredible, but significant hurdles remain. From overcoming the limits of current computing power to developing truly explainable AI, the path forward holds both challenges and exhilarating possibilities. This post dives into the key areas demanding innovation to unlock AI’s full potential.

This exploration will cover advancements needed in computational power, algorithm design, data management, explainability, ethical considerations, generalization, and human-AI collaboration. We’ll examine cutting-edge technologies like quantum computing and innovative approaches to data acquisition and bias mitigation. Ultimately, the goal is to paint a picture of what’s needed to propel AI beyond its current capabilities and into a future where it truly benefits humanity.

Computational Power Advancements

The relentless march of artificial intelligence is inextricably linked to the availability of ever-increasing computational power. While AI has made remarkable strides, its progress is frequently bottlenecked by the limitations of current hardware and energy consumption. The quest for more powerful and efficient AI systems is driving innovation across multiple fronts, promising to unlock new levels of intelligence and capability.

Current limitations in computing power significantly hinder AI progress in several key areas. Training large language models, for instance, requires massive datasets and immense computational resources, often taking weeks or even months on the most powerful supercomputers currently available. Similarly, simulating complex physical systems or developing advanced robotics necessitates far greater processing power than is currently feasible for real-time applications. Furthermore, the energy demands of training and running these large models are substantial, posing both economic and environmental challenges.

Hardware Breakthroughs Accelerating AI Development

Several promising hardware advancements hold the potential to dramatically accelerate AI development. Quantum computing, still in its nascent stages, offers the possibility of solving problems intractable for even the most powerful classical computers. Quantum computers leverage quantum phenomena like superposition and entanglement to perform calculations in a fundamentally different way, potentially enabling breakthroughs in areas like drug discovery, materials science, and optimization problems crucial to AI.

Neuromorphic chips, inspired by the structure and function of the human brain, offer another path towards more energy-efficient and powerful AI systems. These chips use specialized architectures to mimic the parallel processing of biological neural networks, which can bring significant improvements in speed and energy efficiency for certain AI tasks. Intel’s Loihi chip is one neuromorphic design already showing promise in research applications.

Improved Energy Efficiency in AI Systems

The energy consumption of AI systems is a major concern, both economically and environmentally. Training a single large language model can consume a very large amount of electricity; published estimates for some models run to hundreds or even thousands of megawatt-hours. Improving energy efficiency is therefore crucial for the sustainable development of AI. This can be achieved through various approaches, including the development of more energy-efficient hardware, the optimization of AI algorithms, and the use of more sustainable energy sources to power AI data centers.

For example, model compression techniques such as pruning and quantization reduce the size and complexity of AI models, ideally with little loss of accuracy, and can significantly reduce energy consumption. Furthermore, research into distributed training methods such as federated learning, which spreads training across many devices, may help share out the computational burden of training large AI models, although the net energy effect depends on the devices and the communication involved.
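To make model compression a little more concrete, here is a minimal sketch of two common ideas, magnitude pruning and 8-bit quantization, applied to a plain list of numbers standing in for a layer’s weights. The function names and the toy weights are assumptions made for illustration; real frameworks ship their own, far more careful, implementations.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of the weights."""
    k = int(len(weights) * sparsity)  # how many weights to drop
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, dropped = [], 0
    for w in weights:
        if abs(w) <= threshold and dropped < k:
            pruned.append(0.0)
            dropped += 1
        else:
            pruned.append(w)
    return pruned


def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Map the integers back to approximate floating-point weights."""
    return [q * scale for q in q_weights]


# Toy weights (illustrative only, not taken from any real model).
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.45, -0.9]
pruned = prune_by_magnitude(weights, sparsity=0.5)
q_weights, scale = quantize_int8(pruned)
restored = dequantize(q_weights, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
```

Half of the weights become zero and the rest fit in one byte each, at the cost of a rounding error of at most half a quantization step. Whether that trade is acceptable for a given model is an empirical question.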

Comparison of Computing Architectures for AI

| Architecture | Strengths | Weaknesses | Suitability for AI Tasks |
|---|---|---|---|
| Central Processing Unit (CPU) | General-purpose, versatile, mature technology | Relatively low parallel processing capabilities, energy-intensive for large AI models | Suitable for smaller AI tasks, pre-processing, and general-purpose computing |
| Graphics Processing Unit (GPU) | High parallel processing capabilities, well-suited for matrix operations | Less versatile than CPUs, specialized architecture | Excellent for deep learning, training large models, image processing |
| Tensor Processing Unit (TPU) | Highly optimized for machine learning workloads, superior performance for specific tasks | Specialized hardware, limited general-purpose capabilities | Ideal for Google’s machine learning services, large-scale model training |
| Neuromorphic Chip | Energy-efficient, parallel processing, biologically inspired | Relatively new technology, limited software support | Promising for specific AI tasks requiring low power and high efficiency, such as robotics and edge computing |

Algorithm Innovation

The relentless march of artificial intelligence necessitates a parallel evolution in the algorithms that power it. Current advancements, while impressive, are often hampered by limitations in algorithmic efficiency and robustness. The need for more sophisticated and adaptable algorithms is paramount to unlock the full potential of AI and address its inherent challenges. This means moving beyond simple improvements and exploring fundamentally new approaches.

The quest for better AI algorithms isn’t just about incremental gains; it’s about breakthroughs. Current deep learning models, for instance, are computationally expensive and often lack transparency. This necessitates a search for algorithms that are both more efficient in their use of resources and more interpretable in their decision-making processes. Novel approaches like evolutionary algorithms and advanced reinforcement learning techniques offer exciting possibilities in this regard.

Evolutionary Algorithms and Reinforcement Learning

Evolutionary algorithms, inspired by biological evolution, offer a powerful alternative to traditional gradient-based optimization methods used in training neural networks. These algorithms iteratively improve a population of candidate solutions through processes mimicking selection, mutation, and crossover. This approach can be particularly useful in navigating complex, high-dimensional search spaces where gradient-based methods struggle. For example, evolutionary algorithms have shown promise in optimizing the architecture of neural networks themselves, leading to more efficient and effective models.
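The selection, crossover, and mutation loop described above can be sketched in a few lines. This toy example evolves bit strings toward all ones (the classic OneMax exercise) and is only an illustration: the fitness function, population size, and mutation rate are arbitrary assumptions, and evolving a neural network architecture would need a much richer encoding.

```python
import random


def fitness(bits):
    """Toy objective: count the ones in the bit string."""
    return sum(bits)


def tournament(population, rng, k=3):
    """Selection: pick the fittest of k randomly chosen individuals."""
    return max(rng.sample(population, k), key=fitness)


def crossover(a, b, rng):
    """One-point crossover between two parents."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(bits, rng, rate=0.02):
    """Flip each bit independently with a small probability."""
    return [1 - b if rng.random() < rate else b for b in bits]


def evolve(n_bits=30, pop_size=40, generations=60, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        elite = max(population, key=fitness)
        history.append(fitness(elite))
        children = [elite]  # elitism: the best individual always survives
        while len(children) < pop_size:
            child = crossover(tournament(population, rng),
                              tournament(population, rng), rng)
            children.append(mutate(child, rng))
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


best, history = evolve()
```

Because the best individual is copied unchanged into each new generation, the values in `history` never decrease. Note that no gradient is computed anywhere, which is what makes the approach usable on objectives that are not differentiable.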

Reinforcement learning, on the other hand, focuses on training agents to make optimal decisions in an environment through trial and error. Recent advancements in reinforcement learning, such as the development of more sophisticated reward functions and the use of hierarchical reinforcement learning, have enabled the training of agents capable of solving increasingly complex tasks. The combination of evolutionary algorithms and reinforcement learning offers a particularly potent approach, allowing for the automated design and optimization of reinforcement learning agents.
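As a small illustration of learning by trial and error, the sketch below runs tabular Q-learning on a made-up five-state corridor where the only reward sits at the right-hand end. The environment, hyperparameters, and names are assumptions chosen to keep the example tiny; it does not cover hierarchical methods or learned reward functions.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right


def step(state, action):
    """Hypothetical corridor environment: reward 1.0 on reaching the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore
            else:
                best = max(q[state])  # exploit, breaking ties at random
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward the bootstrapped target.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


q_table = train()
# Greedy action for each non-terminal state after training.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
```

After training, the greedy policy moves right from every state, and the learned values fall off by a factor of `gamma` with each step away from the goal.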

Addressing the “Black Box” Problem

The opacity of many complex AI models, often referred to as the “black box” problem, poses a significant challenge to their widespread adoption. Understanding how a model arrives at a particular decision is crucial for building trust and ensuring accountability. Several approaches are being explored to mitigate this issue. Explainable AI (XAI) techniques aim to provide insights into the internal workings of AI models, offering explanations for their predictions.

These techniques include methods such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which approximate the model’s behavior locally or globally. Another approach involves designing inherently interpretable models, such as decision trees or rule-based systems, which are easier to understand than deep neural networks. However, these simpler models often come with a trade-off in terms of accuracy and performance.

The ideal solution likely involves a combination of approaches, using XAI techniques to interpret complex models while also developing more interpretable architectures where appropriate.

A Novel Algorithm for Protein Folding Prediction

Protein folding, the process by which a protein chain adopts its three-dimensional structure, is a notoriously difficult problem with significant implications for drug discovery and disease research. Physics-based simulation is computationally expensive, and although deep learning predictors such as AlphaFold have sharply improved accuracy, hard cases remain. A novel algorithm could leverage a combination of physics-based simulations and machine learning. The algorithm could initially use physics-based simulations to generate a large number of possible protein conformations.

These conformations would then be fed into a deep learning model trained on a large dataset of known protein structures. The model would learn to predict the energy of each conformation and identify the most stable structure. Furthermore, an evolutionary algorithm could be used to optimize the architecture and parameters of the deep learning model, improving its accuracy and efficiency.

This hybrid approach, combining the accuracy of physics-based simulations with the power of machine learning and the efficiency of evolutionary optimization, could potentially lead to a significant breakthrough in protein folding prediction.

Data Acquisition and Management

The availability of high-quality, appropriately labeled data is the lifeblood of successful AI systems. Without it, even the most sophisticated algorithms will fail to deliver accurate and reliable results. This section delves into the crucial challenges and innovative solutions surrounding data acquisition and management for AI development.

The challenges in obtaining sufficient high-quality, labeled data are multifaceted and often represent the biggest bottleneck in AI project timelines. Simply put, the process is expensive, time-consuming, and prone to error.

Challenges in Obtaining High-Quality Labeled Datasets

Acquiring high-quality, labeled datasets for training AI systems presents significant hurdles. The sheer volume of data needed for effective training is often enormous, requiring substantial resources for collection and annotation. Furthermore, ensuring data quality, including accuracy and consistency of labels, is critical to avoid training models on biased or inaccurate information. The cost associated with professional data annotation can be prohibitive, particularly for complex tasks requiring specialized expertise.

Finally, data privacy concerns and regulations add another layer of complexity, limiting access to certain types of data. For example, in the United States, medical image datasets must be handled in compliance with HIPAA.

Improving Data Annotation Efficiency and Reducing Bias

Several strategies can significantly improve data annotation efficiency and mitigate bias. Active learning techniques focus annotation efforts on the most informative data points, reducing the overall annotation workload. Crowdsourcing platforms can leverage the collective effort of many annotators, but require careful quality control mechanisms to ensure consistency and accuracy. Automated annotation tools, utilizing techniques like transfer learning or pre-trained models, can accelerate the process, though human review remains essential to ensure accuracy.
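One simple form of active learning is uncertainty sampling: ask annotators to label the items the current model is least sure about. The sketch below ranks unlabeled items by the entropy of their predicted class probabilities. The item ids and probabilities are invented for illustration, and entropy is only one of several possible uncertainty measures.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (in nats)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` most uncertain unlabeled items."""
    ranked = sorted(predictions,
                    key=lambda item_id: entropy(predictions[item_id]),
                    reverse=True)
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled images (three classes).
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident, little to gain from a label
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # almost uniform, most informative
}
to_label = select_for_annotation(predictions, budget=2)
```

With a budget of two, the near-uniform predictions are sent to annotators first, while the confidently classified image is left for later or skipped.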

To mitigate bias, diverse and representative datasets are crucial. Careful consideration of sampling methods and the inclusion of diverse annotators can help minimize systematic biases in the data. Regular audits of the dataset for potential biases are also essential.

Innovative Data Acquisition Techniques

The limitations of relying solely on real-world data have driven innovation in data acquisition. Synthetic data generation offers a promising solution. By creating artificial data that mimics the characteristics of real-world data, we can augment existing datasets or even create entirely new datasets where real-world data is scarce or expensive to obtain. For example, in autonomous driving, synthetically generated images of various road conditions and traffic scenarios can be used to train models.

Federated learning is another innovative technique. It allows training AI models on decentralized data sources without directly sharing the data itself. This approach is particularly valuable when dealing with sensitive data, such as medical records or financial transactions, where data privacy is paramount. Each participant trains a local model on its own data and then shares model updates with a central server, which aggregates these updates to create a global model.
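The train-locally, aggregate-centrally loop described above is often implemented as federated averaging. Here is a minimal sketch for a one-parameter linear model `y = w * x`, where each client’s data never leaves its own function call. The client datasets, learning rate, and round counts are made-up assumptions, and real systems add secure aggregation, client sampling, and much more.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """A few gradient-descent steps on one client's private (x, y) pairs."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """Server step: average the client models, weighted by dataset size."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Hypothetical private datasets, all roughly following y = 3x.
clients = [
    [(1.0, 3.1), (2.0, 5.9), (3.0, 9.2)],
    [(1.5, 4.4), (2.5, 7.6)],
    [(0.5, 1.4), (4.0, 12.1), (3.5, 10.4), (2.0, 6.1)],
]

global_w = 0.0
for _ in range(50):  # communication rounds
    # Only model parameters travel to the server, never the raw data.
    local_models = [local_update(global_w, data) for data in clients]
    global_w = federated_average(local_models, [len(d) for d in clients])
```

The global parameter settles close to 3 even though the server only ever sees each client’s updated weight and sample count.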

Creating a Large-Scale, Publicly Available Dataset for Handwritten Digit Recognition

A plan for creating a large-scale, publicly available dataset for handwritten digit recognition could involve several stages. First, a data collection strategy would need to be defined, perhaps utilizing online sources or crowdsourcing platforms to gather diverse samples of handwritten digits. Next, a robust annotation process would be implemented, potentially using a combination of automated tools and human experts to ensure high accuracy and consistency.

Data cleaning and quality control steps would be crucial to remove duplicates or noisy data. Finally, the dataset would be meticulously documented, including details about the data collection methods, annotation procedures, and any potential biases. This metadata is crucial for transparency and reproducibility. The dataset would then be made publicly available through a reputable repository, ensuring easy access for researchers and developers.

This dataset could significantly benefit the field of machine learning and contribute to advancements in various applications like automated postal code reading or check processing.
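The cleaning step in the plan above might look something like the following sketch, which drops exact duplicate images and sets aside images that were given conflicting labels so a human can re-check them. Representing an image as a tuple of pixel values and the function names used here are assumptions for illustration; near-duplicate detection would need something more than an exact hash.

```python
import hashlib
from collections import defaultdict


def fingerprint(pixels):
    """Hash the raw pixel values (integers 0..255) of one image."""
    return hashlib.sha256(bytes(pixels)).hexdigest()


def clean(samples):
    """Drop exact duplicates; route images with conflicting labels to review."""
    labels_by_image = defaultdict(set)
    for pixels, label in samples:
        labels_by_image[fingerprint(pixels)].add(label)

    kept, needs_review, seen = [], [], set()
    for pixels, label in samples:
        key = fingerprint(pixels)
        if key in seen:
            continue  # exact duplicate of an image already handled
        seen.add(key)
        if len(labels_by_image[key]) > 1:
            needs_review.append(pixels)  # annotators disagreed on this image
        else:
            kept.append((pixels, label))
    return kept, needs_review


# Tiny made-up "images" of four pixels each.
samples = [
    ((0, 255, 0, 255), 1),
    ((0, 255, 0, 255), 1),  # exact duplicate
    ((255, 255, 0, 0), 7),
    ((9, 9, 9, 9), 4),
    ((9, 9, 9, 9), 9),      # same image, conflicting label
]
kept, needs_review = clean(samples)
```

Counts such as how many duplicates were removed and how many items went to review are exactly the kind of detail worth recording in the dataset’s documentation.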

Explainable AI (XAI)

The rapid advancement of AI has brought incredible capabilities, but it’s also highlighted a critical need: understanding how these complex systems arrive at their decisions. Explainable AI (XAI) addresses this need, focusing on making the decision-making processes of AI models more transparent and understandable to both experts and non-experts. This is crucial not only for building trust in AI but also for ensuring fairness, accountability, and the responsible deployment of AI across various sectors.

The Importance of Transparency in AI Decision-Making

Transparency in AI is paramount for several reasons. First, it allows us to identify and correct biases embedded within the data or algorithms. Biased AI systems can perpetuate and amplify existing societal inequalities, leading to unfair or discriminatory outcomes. Second, understanding how an AI system arrived at a specific decision enables us to debug errors and improve its performance.

Third, and perhaps most importantly, transparency fosters trust. When individuals understand how an AI system works, they are more likely to accept its decisions and collaborate with it effectively. Without transparency, AI systems risk being perceived as “black boxes,” undermining their acceptance and utility.

Methods for Developing More Explainable AI Models

Developing explainable AI models requires a multifaceted approach. One strategy involves using inherently interpretable models, such as linear regression or decision trees, which are easier to understand than complex deep learning models. Another approach involves designing models that produce explanations alongside their predictions. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) create simplified local explanations for individual predictions, highlighting the features that most influenced the model’s decision.

Furthermore, incorporating techniques like attention mechanisms in neural networks can provide insights into which parts of the input data the model focused on during its decision-making process. These methods aim to bridge the gap between the complexity of AI models and the need for human understanding.
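To show what the Shapley values behind SHAP actually measure, the sketch below computes them exactly for a tiny hand-written model by averaging each feature’s marginal contribution over every possible ordering. This is brute-force enumeration for illustration only, not the SHAP library’s API or its approximation algorithms, and the model, instance, and baseline are invented.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Exact Shapley attributions by enumerating all feature orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)  # start with every feature "switched off"
        previous = model(current)
        for i in order:
            current[i] = instance[i]  # switch feature i on
            value = model(current)
            totals[i] += value - previous  # marginal contribution of i
            previous = value
    return [t / len(orderings) for t in totals]


def model(x):
    """Hypothetical model: one additive term and one interaction term."""
    return 2.0 * x[0] + x[1] * x[2]


instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, instance, baseline)
```

The first feature receives its full additive effect of 2, while the interaction term worth 6 is split evenly between the other two features. The attributions sum to the difference between the prediction for the instance and the prediction for the baseline. The number of orderings grows factorially with the number of features, which is why practical tools rely on approximations.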

Applications Where Explainability is Crucial

Explainability is particularly crucial in high-stakes domains where the consequences of incorrect decisions can be severe. In healthcare, for example, explainable AI models can help doctors understand why an AI system diagnosed a patient with a particular condition, allowing them to validate the diagnosis and make informed treatment decisions. Similarly, in finance, explainable AI models can be used to assess credit risk, detect fraud, or provide personalized financial advice, with the transparency needed to ensure fairness and accountability.

In these sensitive areas, understanding the reasoning behind AI’s decisions is not just desirable, but essential.

Techniques for Visualizing and Interpreting AI Models

Visualizing the internal workings of AI models is a key aspect of XAI. Various techniques can be employed, depending on the type of model and the desired level of detail. For instance, decision trees can be visualized as diagrams, clearly showing the decision paths and the features used at each node. Heatmaps can highlight the importance of different features in influencing a model’s prediction.

Saliency maps can visually represent the areas of an image that were most influential in a model’s classification. These visualization techniques provide valuable insights into the model’s behavior, making it easier to understand its strengths and weaknesses. For example, a heatmap applied to a medical image analysis might show which regions of the image were most crucial for a diagnostic decision, aiding a radiologist in their assessment.

Another example would be visualizing a decision tree used in a loan application process, clearly showing the factors influencing loan approval or denial.
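One simple way to produce the kind of heatmap described above is occlusion: blank out one region of the input at a time and record how much the model’s score drops. The sketch below does this pixel by pixel on a 4x4 grid with a stand-in scoring function. Both the “image” and the model are assumptions for illustration; gradient-based saliency methods work differently.

```python
def occlusion_map(model, image, fill=0.0):
    """Score drop caused by blanking each pixel in turn."""
    base = model(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c, _ in enumerate(row):
            occluded = [list(line) for line in image]  # copy the image
            occluded[r][c] = fill
            heat_row.append(base - model(occluded))
        heat.append(heat_row)
    return heat


def toy_model(image):
    """Hypothetical scorer that only looks at the central 2x2 patch."""
    return sum(image[r][c] for r in (1, 2) for c in (1, 2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(toy_model, image)

# Crude text rendering: '#' marks pixels whose removal changes the score.
for row in heat:
    print("".join("#" if value > 0 else "." for value in row))
```

Only the four central pixels light up, which matches how the stand-in model was written. On a real model the same procedure shows which regions its prediction actually depends on.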

Ethical Considerations and Societal Impact

The rapid advancement of AI presents us with unprecedented opportunities, but also significant ethical challenges. The potential benefits are immense, but so are the risks if we fail to address the societal implications proactively. Ignoring these ethical considerations could lead to unforeseen and potentially catastrophic consequences, undermining the very progress we seek to achieve. A responsible approach requires careful consideration of the potential harms and the development of robust mitigation strategies.

Advanced AI systems, while offering incredible potential for progress in various sectors, pose substantial risks to society. These risks aren’t merely hypothetical; they are emerging in real-time, demanding immediate attention and proactive solutions. Understanding these risks is crucial for guiding responsible development and deployment.

Societal Risks Associated with Advanced AI Systems

The deployment of sophisticated AI systems presents several significant societal risks. Job displacement due to automation is a major concern, particularly in sectors heavily reliant on repetitive tasks. This necessitates proactive strategies for workforce retraining and adaptation to the changing job market. Furthermore, the amplification of existing societal biases embedded within training data can lead to discriminatory outcomes, perpetuating and even exacerbating inequalities.

Algorithmic bias, for example, can manifest in loan applications, hiring processes, and even criminal justice systems, resulting in unfair or unjust treatment of specific demographic groups. Another key concern is the potential for misuse of AI, including the development of autonomous weapons systems and the spread of misinformation through sophisticated deepfakes.

Strategies for Mitigating Risks and Ensuring Responsible AI Development

Mitigating the risks associated with advanced AI requires a multi-pronged approach. Firstly, investing in education and retraining programs is crucial to help workers adapt to the changing job market. This includes providing opportunities for acquiring new skills relevant to the AI-driven economy. Secondly, rigorous testing and auditing of AI systems for bias are essential. This involves developing methods to identify and mitigate biases embedded in algorithms and datasets, ensuring fairness and equity in AI applications.

Thirdly, promoting transparency and explainability in AI decision-making is vital to build trust and accountability. This involves developing techniques to make AI systems more understandable and interpretable, allowing users to understand the reasoning behind AI-driven decisions. Finally, fostering collaboration between researchers, policymakers, and industry stakeholders is crucial to establish ethical guidelines and regulations for AI development and deployment.

The Need for Regulations and Ethical Guidelines

The absence of clear regulations and ethical guidelines for AI research and deployment creates a significant void. Without a robust framework, the potential for misuse and unintended consequences is greatly amplified. Establishing international standards and collaborations is crucial to ensure consistent and effective oversight of AI technologies. These guidelines should address issues such as data privacy, algorithmic transparency, and accountability for AI-driven decisions.

The regulatory framework should also encourage innovation while preventing harm, fostering a balance between progress and responsible development. Examples of existing initiatives include the EU’s AI Act and various national-level efforts focused on ethical AI frameworks.

A Framework for Assessing the Ethical Implications of a Specific AI Application

A comprehensive framework for assessing the ethical implications of a specific AI application should consider several key factors. This framework should evaluate the potential for bias in the data used to train the AI system, assess the potential for job displacement and economic disruption, examine the potential for misuse or malicious use of the technology, and analyze the impact on privacy and data security.

Furthermore, the framework should consider the potential for the AI system to perpetuate or exacerbate existing social inequalities, and evaluate the system’s overall societal impact, including both its potential benefits and harms. This framework would serve as a guide for developers and stakeholders to proactively address potential ethical concerns before deployment. For example, before deploying an AI-powered hiring tool, a thorough assessment would involve analyzing the data for biases, testing the system’s fairness across different demographic groups, and establishing mechanisms for human oversight and appeal.
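Testing a hiring tool’s fairness across groups can start with something as simple as comparing selection rates. The sketch below computes the rate per group and the ratio between the lowest and highest rate, then flags the tool when that ratio falls under 0.8, a rule of thumb often called the four-fifths rule. The group labels and decisions are fabricated for illustration, and a single ratio is a screening signal rather than a full fairness assessment.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Fraction of candidates selected within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [selected, total]
    for group, selected in decisions:
        counts[group][0] += int(selected)
        counts[group][1] += 1
    return {g: s / t for g, (s, t) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up outcomes from a hypothetical screening model.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4 +
    [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_human_review = ratio < 0.8  # four-fifths rule of thumb
```

Here group B is selected at half the rate of group A, so the tool would be sent back for investigation before deployment.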

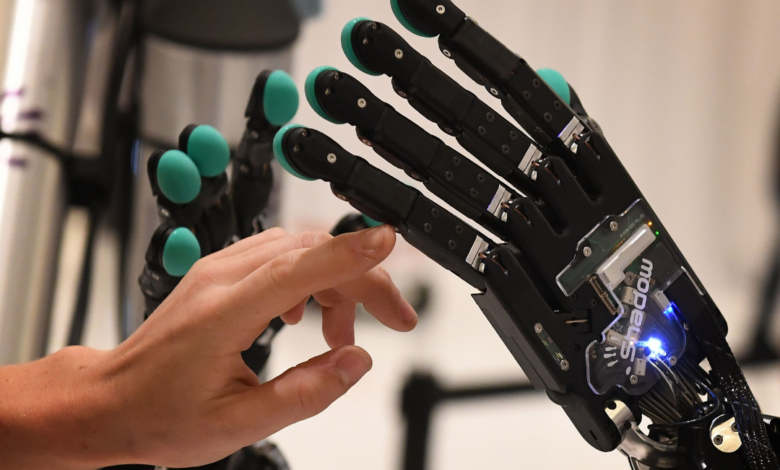

Human-AI Collaboration

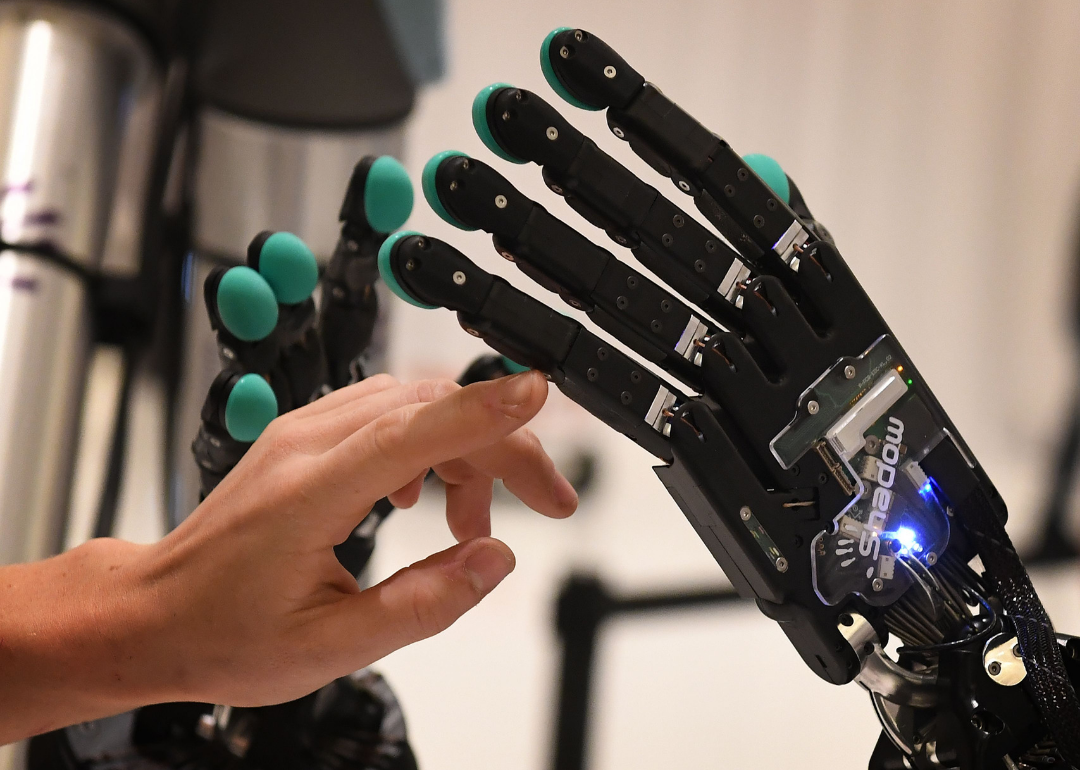

The future of AI isn’t about machines replacing humans, but about machines augmenting human capabilities. Effective human-AI collaboration is crucial for harnessing the full potential of artificial intelligence, leading to more efficient workflows, innovative solutions, and improved decision-making across various sectors. Designing systems that foster seamless interaction between humans and AI is paramount for realizing this potential.

Designing AI systems for effective collaboration requires a deep understanding of human cognitive processes and limitations. It’s not enough to simply create powerful algorithms; we must also consider how those algorithms are presented to, and interacted with, by human users. Intuitive interfaces and clear communication are essential for building trust and ensuring that humans can effectively utilize the AI’s capabilities.

Intuitive AI Interfaces

Creating intuitive AI interfaces involves prioritizing user experience. This means designing interfaces that are simple to understand and use, regardless of the user’s technical expertise. Clear visual representations of data, concise feedback mechanisms, and the ability to easily adjust AI parameters are all key components. For example, instead of presenting complex algorithms in raw code, an interface might use interactive dashboards displaying key insights visually, allowing users to understand patterns and trends without needing programming knowledge.

Natural language processing (NLP) plays a crucial role, enabling users to interact with AI systems through conversational interfaces, making the interaction feel more natural and less technical. Consider a medical diagnosis system where a doctor can input symptoms in natural language, and the system responds with probable diagnoses and suggested tests in a clear, easily understandable format, rather than complex statistical outputs.

Examples of Successful Human-AI Collaboration

Human-AI collaboration is already transforming various fields. In healthcare, AI-powered diagnostic tools assist doctors in identifying diseases earlier and more accurately. Radiologists, for example, can use AI to detect subtle anomalies in medical images that might be missed by the human eye, leading to faster and more accurate diagnoses. In manufacturing, robots collaborate with human workers on assembly lines, performing repetitive tasks while humans focus on more complex and creative aspects of the production process.

This improves efficiency and reduces the risk of repetitive strain injuries for human workers. Furthermore, in scientific research, AI algorithms are used to analyze vast datasets, identifying patterns and insights that would be impossible for humans to detect manually, accelerating the pace of discovery in fields like genomics and materials science.

Design of a Human-AI Collaborative System for Fraud Detection

Let’s consider a human-AI collaborative system for fraud detection in financial transactions. The system would consist of an AI component trained on a large dataset of historical transactions, identifying patterns indicative of fraudulent activity. The AI would flag suspicious transactions, providing a risk score and highlighting relevant features. However, the final decision on whether a transaction is fraudulent would rest with a human analyst.

The interface would present the flagged transactions in a user-friendly dashboard, displaying the risk score, relevant transaction details, and the AI’s reasoning behind flagging the transaction. The human analyst can then review the AI’s findings, investigate further, and make the final decision, overriding the AI’s assessment if necessary. This system leverages the AI’s ability to process vast amounts of data quickly and identify subtle patterns, while retaining human oversight to ensure accuracy and address edge cases that the AI might miss.

This collaborative approach combines the strengths of both human judgment and AI processing power, resulting in a more robust and effective fraud detection system.
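The division of labor described above can be sketched as a small triage pipeline: a scoring function flags transactions with a risk score and reasons, and nothing is marked as fraud until an analyst records a decision. The scoring rules here are a hand-written stand-in for a trained model, and every field name and threshold is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Flag:
    transaction_id: str
    risk_score: float
    reasons: list
    analyst_decision: str = "pending"  # set only by a human


def score(txn):
    """Hypothetical stand-in for a trained model: returns (risk, reasons)."""
    risk, reasons = 0.0, []
    if txn["amount"] > 5000:
        risk += 0.5
        reasons.append("unusually large amount")
    if txn["country"] != txn["home_country"]:
        risk += 0.3
        reasons.append("foreign location")
    if txn["hour"] < 5:
        risk += 0.2
        reasons.append("unusual time of day")
    return min(risk, 1.0), reasons


def triage(transactions, threshold=0.5):
    """Build the analyst's review queue, highest risk first."""
    queue = []
    for txn in transactions:
        risk, reasons = score(txn)
        if risk >= threshold:
            queue.append(Flag(txn["id"], risk, reasons))
    return sorted(queue, key=lambda flag: flag.risk_score, reverse=True)


def record_decision(flag, decision):
    """The human analyst has the final say and may overrule the score."""
    if decision not in {"fraud", "legitimate"}:
        raise ValueError("decision must be 'fraud' or 'legitimate'")
    flag.analyst_decision = decision
    return flag


transactions = [
    {"id": "t1", "amount": 120, "country": "US", "home_country": "US", "hour": 14},
    {"id": "t2", "amount": 9000, "country": "BR", "home_country": "US", "hour": 3},
    {"id": "t3", "amount": 7000, "country": "US", "home_country": "US", "hour": 12},
]
queue = triage(transactions)
record_decision(queue[1], "legitimate")  # analyst overrides a borderline flag
```

Keeping the reasons alongside the score is what lets the dashboard show why a transaction was flagged, and keeping `analyst_decision` separate from `risk_score` preserves a record of where the human and the model disagreed.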

The journey towards truly transformative AI requires a multifaceted approach. It’s not enough to simply build more powerful computers; we need smarter algorithms, better data, and a deep understanding of the ethical implications. By tackling these challenges head-on, through innovation in hardware, software, data management, and ethical frameworks, we can pave the way for an AI future that is not only powerful but also responsible and beneficial for all.